This page offers a concise overview of the EU AI Act and a practical guide to responsible AI governance and compliance, with embedded links to the official EU AI Act text where appropriate.

Get your organization ready for the upcoming AI regulations around the globe that will guide the ethical development and deployment of these cutting-edge technologies. Equip your team with best practices to build solid AI governance structures, carry out thorough risk evaluations, ensure effective human oversight, and promote clarity while reducing exposure to legal challenges and fines.

To read the neatly arranged EU AI Act in full, visit our EU AI Act Resources or find a personalized breakdown of the sections that apply to your organization with Whisperly’s AI Act Compliance Checker.


EU AI Act Summary in 5 Points

1. Global Regulatory Reach

The EU AI Act is the world’s most comprehensive AI legislation with global impact, applying to all AI systems and general-purpose AI models (GPAIs) available in or affecting the EU market, regardless of provider location, similar to GDPR.

2. Risk-Based Framework

AI systems are categorized into four risk levels: unacceptable, high, limited, and minimal, with stringent obligations mostly for high-risk AI, including mandatory conformity assessments, detailed documentation, transparency, human oversight, and cybersecurity.

3. Clear Obligations by Role

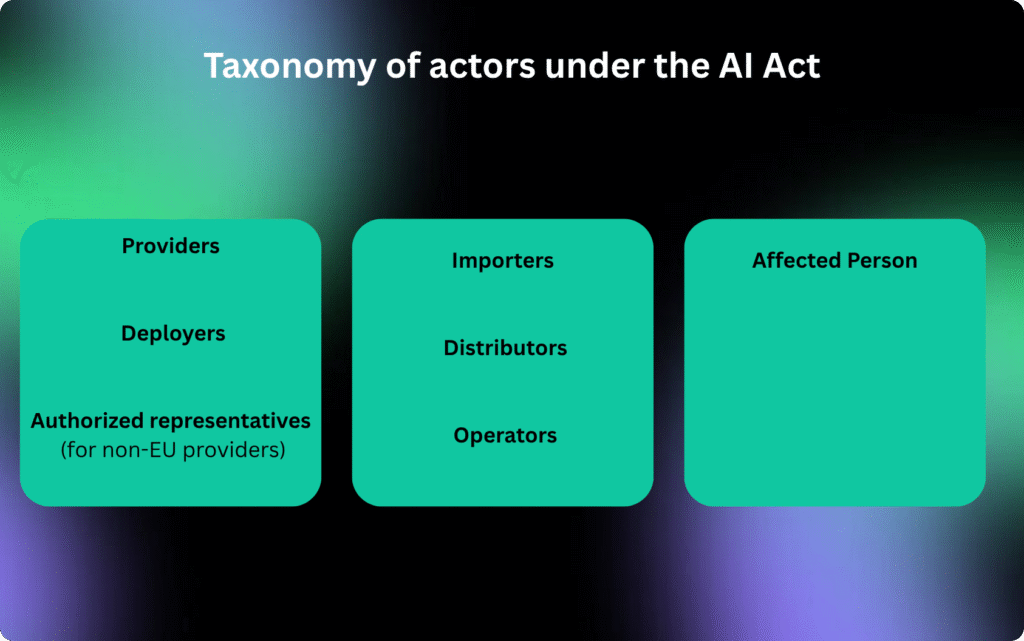

Providers, importers, distributors, deployers, and authorized representatives each have distinct responsibilities, such as system validation, documentation, market registration, operational oversight, and mandatory incident reporting.

4. EU AI Act Timeline

The EU AI Act entered into force on 1 August 2024, initiating a structured compliance timeline:

- prohibitions of unacceptable-risk AI practices and the general provisions (including AI literacy) apply from 2 February 2025

- governance measures, rules on notified bodies, confidentiality, GPAI obligations and most penalties follow on 2 August 2025

- general application, including the obligations for high-risk AI systems listed in Annex III and the transparency rules for limited-risk systems, begins on 2 August 2026

- the timeline culminates on 2 August 2027, when the rules for high-risk AI systems that are safety components of products covered by Annex I legislation apply and GPAI models placed on the market before 2 August 2025 must comply.

5. Significant Penalties for Non-compliance

Violations may incur fines of up to €35 million or 7% of worldwide annual turnover (whichever is higher) for prohibited practices, and up to €15 million or 3% for breaches of most other obligations, including those for high-risk systems, reinforcing the importance of rigorous compliance.

What is the EU AI Act?

The European Union’s Artificial Intelligence Act is the most ambitious and comprehensive regulatory framework for artificial intelligence in the world. The EU AI Act is a regulation, meaning it has uniform legal force across all EU member states without requiring national legislation to implement it, and establishes rules and obligations directly binding on organizations developing, deploying, or using AI systems within or impacting the EU market. This approach ensures standardized AI governance and enforcement of AI standards across the entire European Union, providing clarity and legal certainty for businesses while safeguarding citizens’ fundamental rights and interests through uniform, transparent, and enforceable rules.

The scope of the EU AI Act goes beyond EU-headquartered entities. It employs the same “extraterritorial” reach popularized by the GDPR: regardless of where your organization has a corporate presence, if you place an AI system on the EU market or make it available for use within EU borders, you are bound by its requirements.

The EU AI Act’s Role in International AI Governance – New Global Benchmark

By introducing the EU AI Act, Europe isn't just setting its own rules; it's aiming to ignite a global conversation about what responsible AI governance looks like. Designed as a model, the law not only creates a single European AI governance system but also invites other countries to adopt its approach as they shape and align their own AI regulations. For more on how this pioneering approach to responsible AI developed in Europe, see here.

So what do we mean by “AI governance,” anyway? Imagine it as the strategic compass that guides an organization through every phase of AI’s lifecycle, from development and deployment to ongoing oversight. It blends legal requirements, corporate policies, best-practice frameworks, and international standards into a cohesive toolkit, empowering everyone from engineers at the keyboard to executives in the boardroom to build, launch, and steer AI systems with both creativity and caution.

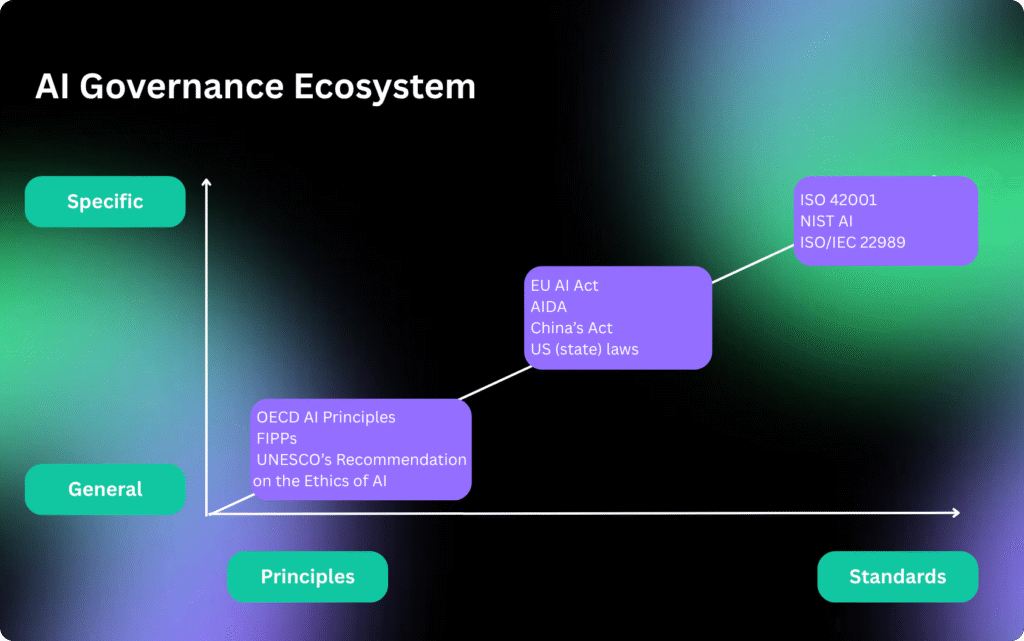

Two pillars hold up any robust AI governance regime: principles and frameworks.

Principles of AI Governance

Principles of AI governance are universally recognized standards intended to promote fairness, transparency, accountability, and respect for human rights in the development and use of artificial intelligence. Although the specific language used varies, prominent international guidelines consistently embody these fundamental ethical values and serve as widely accepted global benchmarks.

Most notable examples are:

Frameworks for AI Governance

If principles set the destination, frameworks map out the route. They provide the step-by-step guidance organizations need to translate lofty values into concrete policies, processes, and controls. Although many frameworks share common goals, each is tailored to a particular context or industry:

- ISO/IEC 42001:2023 (Artificial intelligence management systems)

- ISO/IEC 22989:2022 (AI concepts and terminology)

- NIST AI Risk Management Framework (U.S.).

For a deeper dive into how different jurisdictions are legislating AI, complete with status updates and key provisions, check out the IAPP’s Global AI Legislation Tracker.


What does the EU AI Act Regulate?

Encompassing 180 recitals, 113 articles and 13 annexes, the EU AI Act oversees every stage in the lifecycle of AI systems through a four-tier, risk-based classification:

a. Unacceptable risk: AI systems whose operation is deemed contrary to EU values and fundamental rights. These systems are prohibited.

b. High risk: AI systems that pose significant threats to health, safety or fundamental rights. This includes AI used as safety components in products covered by existing EU law (Annex I) and a defined list of critical use cases (Annex III).

c. Limited risk: AI systems that interact directly with humans or generate synthetic content, such as chatbots, deepfake generators, or emotion recognition tools.

d. Minimal risk: the vast majority of AI applications, such as spam filters, AI-enabled video games and basic recommendation engines, which are considered to pose little or no risk.

Most obligations apply to high-risk AI systems, while limited-risk obligations mainly concern transparency. Minimal-risk AI systems are generally free from additional regulatory requirements, though providers are encouraged to follow voluntary codes of conduct and adhere to overarching EU principles on human oversight, non-discrimination, and data protection.

Determine the risk for each of your AI systems with our AI Act Checker

The EU AI Act casts a wide, extraterritorial net, meaning very few AI systems are wholly exempt.

Those that fall outside the scope are:

- AI systems in purely personal, non-professional use (for example, hobby projects kept entirely offline), though even here the transparency rules built into “limited risk” systems (think chatbots or deepfake tools) may still apply.

- AI systems released under free and open-source licences are excluded unless they are placed on the market or put into service as high-risk AI systems, as prohibited AI practices, or as systems subject to the transparency obligations of Article 50. Once an open-source system powers a service offered in the EU, however, that service is subject to the AI Act.

There are also three very limited exceptions:

- Scientific research & development: AI systems (and their outputs) that are specifically developed and put into service solely for scientific research and development.

- Testing before market placement: Systems used exclusively to test an AI system before it is placed on the market.

- Military/defense applications: AI developed or used exclusively for military, defense, or national security purposes.

Otherwise, the EU AI Act applies to organizations in the EU that develop, deploy, import or distribute AI systems, as well as to organizations outside the EU that place AI systems on the EU market or whose AI systems produce output used within the Union.

If you are unsure whether your AI system lies beyond the EU AI Act’s reach, try our AI Act Checker for a quick assessment.

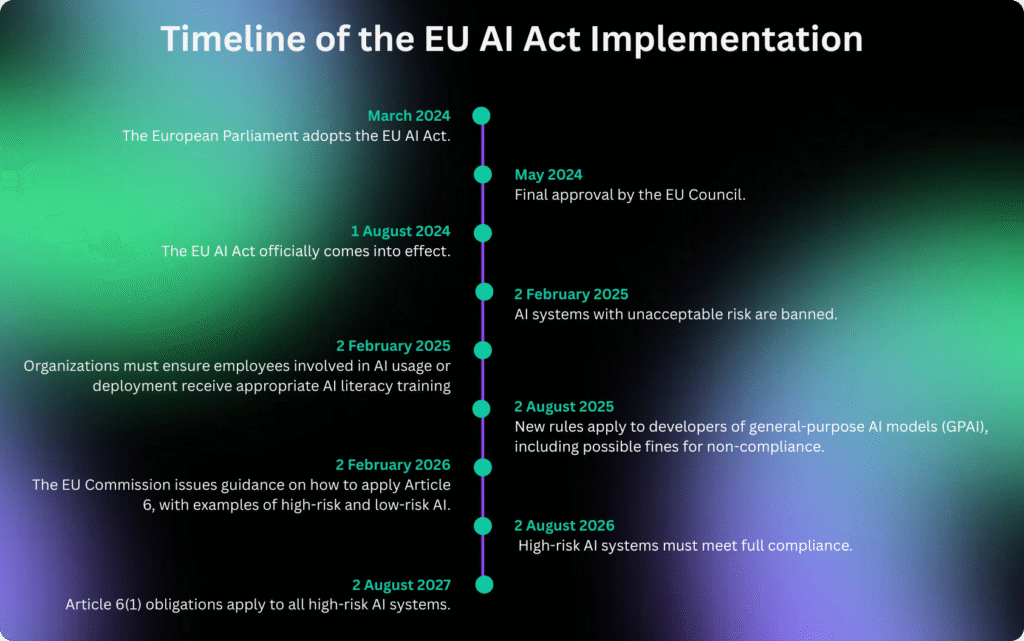

EU AI Act Timeline – Key Dates You Can't Miss

When did the EU AI Act enter into force?

On 12 July 2024, the EU AI Act was published in the Official Journal of the European Union and entered into force on 1 August 2024.

Compliance deadlines are then staggered over a period of six to thirty-six months after entry into force. Until 2 February 2025, there were no mandatory obligations, but voluntary early compliance was encouraged (Recital 178).

What Applies Next?

Although the EU AI Act applies generally from 2 August 2026, some provisions have different application dates:

- General rules, definitions, and bans on certain AI uses became enforceable on 2 February 2025

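- Rules on notifying authorities and notified bodies, governance measures, GPAI model obligations, confidentiality, and most penalties apply from 2 August 2025, although providers who placed GPAI models on the EU market before that date have until 2 August 2027 to comply

- Article 6(1), which classifies as high-risk those AI systems that are safety components of products covered by the Union harmonization legislation in Annex I (or are themselves such products), becomes binding with its corresponding obligations on 2 August 2027; the Annex III high-risk obligations already apply from 2 August 2026.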
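To make the staggered schedule concrete, here is a minimal Python sketch that encodes the application dates set out in Article 113 and reports which milestones already apply on a given date. The milestone labels are simplified summaries, and the names `MILESTONES`, `applicable_milestones` and `next_milestone` are hypothetical; this is an illustration, not a compliance tool.

```python
from datetime import date
from typing import Optional

# Application dates from Article 113 of Regulation (EU) 2024/1689.
# Labels are simplified summaries, not the legal text.
MILESTONES = [
    (date(2025, 2, 2), "General provisions (incl. AI literacy) and prohibited practices"),
    (date(2025, 8, 2), "Notified bodies, GPAI model obligations, governance, confidentiality, most penalties"),
    (date(2026, 8, 2), "General application, incl. Annex III high-risk and Art. 50 transparency"),
    (date(2027, 8, 2), "Art. 6(1) high-risk (Annex I products); legacy GPAI models must comply"),
]


def applicable_milestones(on: date) -> list[str]:
    """Return the labels of all milestones already applicable on the given date."""
    return [label for start, label in MILESTONES if on >= start]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    """Return the first milestone that has not started yet, or None if all apply."""
    for start, label in MILESTONES:
        if on < start:
            return start, label
    return None


if __name__ == "__main__":
    today = date(2025, 9, 1)
    for label in applicable_milestones(today):
        print("applies:", label)
    print("next:", next_milestone(today))
```

Keeping the dates in one ordered list means a single comparison per milestone answers both "what applies now?" and "what comes next?".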

When do prohibited practice obligations under the EU AI Act go into effect?

The application of prohibited practice obligations under the EU AI Act began on 2 February 2025.

What is the Definition of an AI System under the EU AI Act?

The EU AI Act defines an AI system as:

“a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”

This definition is aligned with the definition provided by the OECD for the term.

So, there are four key elements of this definition:

- Machine-based system: any software (or combination of software and hardware) that processes data algorithmically

- Varying levels of autonomy: may require no human intervention or may work under human oversight

- Adaptiveness after deployment: may learn or update its behavior over time, based on new data

- Inference-based outputs: generates predictions, classifications, recommendations, content or decisions from inputs.

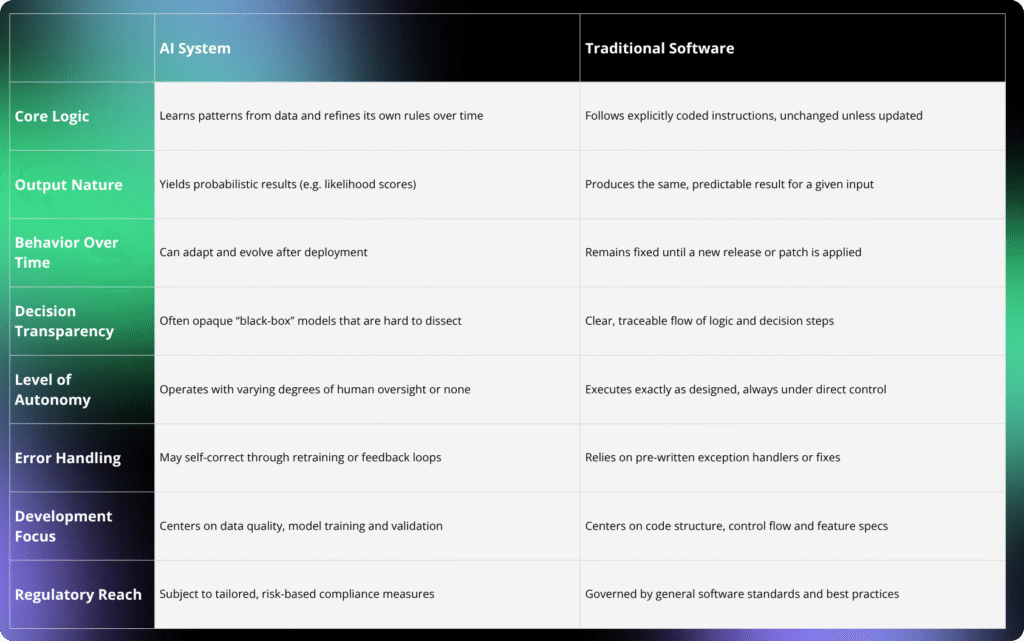

Below is a summary table highlighting the principal distinctions between AI systems and traditional software across multiple key dimensions.

General Purpose AI (GPAI) under the EU AI Act

The EU AI Act describes general-purpose AI models in terms of their broad applicability and ability to carry out a diverse array of tasks with competence. According to the definition of the GPAI models, key features include:

- Extensive, versatile training: They are built on massive datasets using techniques like self-supervised, unsupervised or reinforcement learning.

- Multiple distribution channels: They can be offered via code libraries, APIs, direct downloads or even as physical media.

- Adaptable architecture: They can be further refined or fine-tuned into new variants.

- AI Model vs. AI system distinction: While these models underpin AI systems, on their own they are not complete systems.

- Scale indicator: one benchmark of generality is size; models with at least a billion parameters, trained on large amounts of data using self-supervision at scale, are presumed to display significant generality.

When the provider of a general-purpose AI model integrates its own model into its own AI system that is made available on the market or put into service, that model should be considered to be placed on the market and, therefore, the obligations in the EU AI Act for models should continue to apply in addition to those for AI systems. The obligations laid down for models should in any case not apply when an own model is used for purely internal processes that are not essential for providing a product or a service to third parties and the rights of natural persons are not affected.

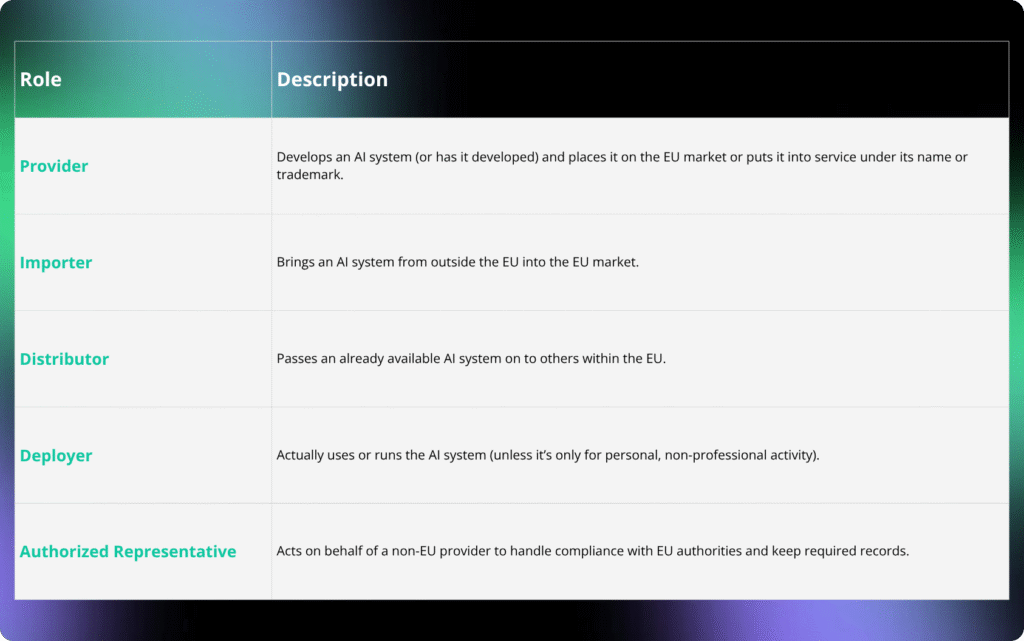

How to Determine Your Role under the EU AI Act?

Under the EU AI Act, organizations must identify their specific role concerning each AI system because obligations vary according to the role.

For a deeper dive into each role, refer to our guide Who Has To Comply with the EU AI Act.

Determine your role for each of your AI systems with our AI Act Checker.

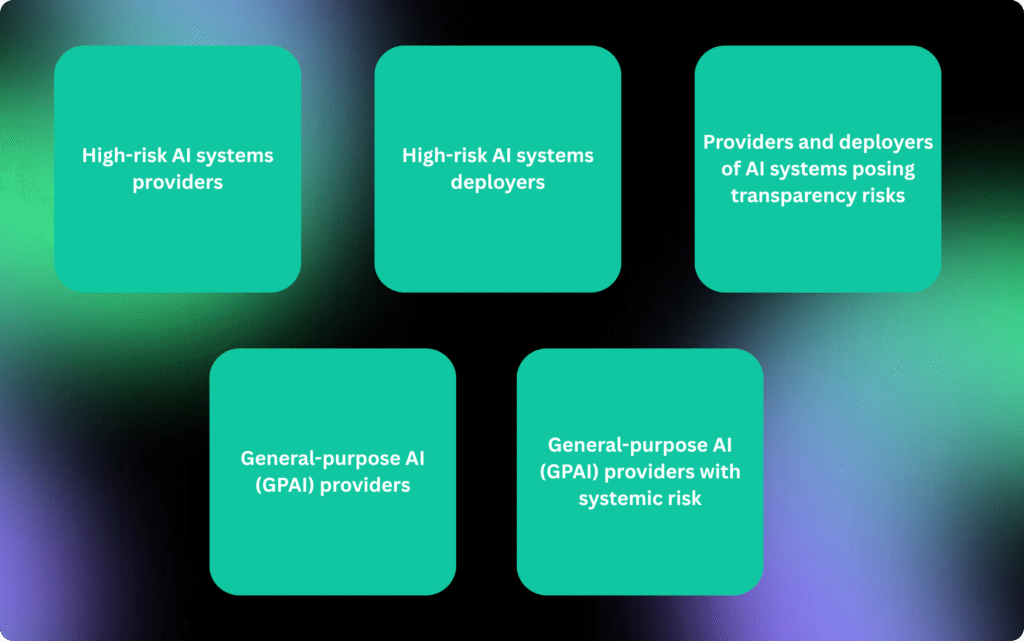

What Organizations Must Do under the EU AI Act?

Under the EU AI Act, an organization’s list of obligations varies according to its role in the AI lifecycle (e.g., provider, importer, deployer), the type of AI technology involved (e.g., AI system or general-purpose AI model), and the system’s risk classification (unacceptable, high-risk, limited-risk, or minimal-risk).

Most obligations of the EU AI Act relate to the following groups of organizations and AI systems.

Prohibited AI Systems

Under the EU AI Act, certain AI technology is banned outright. Therefore, any organization that provided or deployed a prohibited AI system was required to halt all related activities by 2 February 2025.

AI that uses subliminal, manipulative or deceptive techniques to distort people's behaviour, exploits vulnerable groups, or assigns “social scores” based on behaviour or personal traits is prohibited. So are systems that predict an individual's risk of committing a crime based solely on profiling or personality traits, untargeted scraping of facial images to build facial recognition databases, and emotion inference in workplaces and educational institutions (except for medical or safety reasons).

Likewise, biometric categorization that deduces sensitive attributes (such as race, religion, political views or sexual orientation) is forbidden, as is real-time remote biometric identification in publicly accessible spaces for law-enforcement purposes, except in a narrow set of strictly regulated situations.

You may find the entire list of prohibited AI Systems in Article 5 of the EU AI Act.

Determine whether your AI system is prohibited with our AI Act Checker.

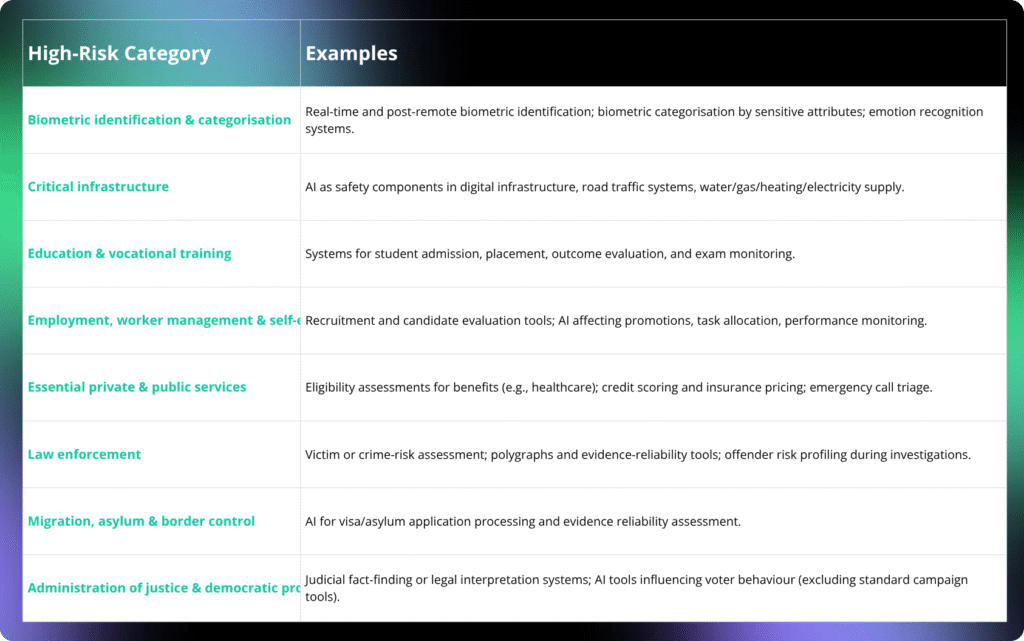

High-Risk AI systems

a. Which AI Systems are classified as High-Risk?

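Under the EU AI Act, AI applications that could substantially affect individuals’ rights, safety, or well-being are designated as high-risk and must meet enhanced regulatory requirements. Annex III lists eight areas of high-risk use cases:

- Biometrics (remote biometric identification, biometric categorization and emotion recognition, where not prohibited)

- Critical infrastructure

- Education and vocational training

- Employment, workers management and access to self-employment

- Access to essential private and public services and benefits

- Law enforcement

- Migration, asylum and border control management

- Administration of justice and democratic processes

An AI system is also “high-risk” if both of the following apply: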

- It is either designed to serve as a safety component of a product covered by the Union harmonization legislation in Annex I, or it is itself a product falling under that legislation;

- That product (or, where applicable, the system-as-product itself) must undergo a third-party conformity assessment before being placed on the EU market or put into service.

Bear in mind that the Annex III categories include carve-outs for specific, low-risk uses, typically where the system has only a minor impact on decision-making.

b. Exemption of an AI system from High-Risk Classification

An AI system that falls under Annex III is not classified as high-risk if it does not pose a significant risk of harm, which is the case where it meets at least one of the following conditions:

- The AI system is intended to perform a narrow procedural task

- The AI system is designed solely to improve the result of a previously completed human activity

- The AI system is built to detect patterns or deviations in human decision-making and is not intended to replace or influence a previously completed human assessment without proper human review

- The AI system performs only a preparatory task for an assessment relevant to the use cases listed in Annex III of the EU AI Act.

However, an Annex III system that performs profiling of natural persons is always considered high-risk, and a provider that relies on this exemption must document its assessment before placing the system on the market (Art. 6(4)).
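The exemption logic of Article 6(3) can be expressed as a short decision function. This is a minimal sketch, assuming the yes/no answers come from your own documented legal assessment; the class `AnnexIIIUseCase` and its field names are hypothetical, and the code only mirrors the structure of the rule (profiling overrides everything; otherwise any one condition suffices).

```python
from dataclasses import dataclass


@dataclass
class AnnexIIIUseCase:
    """Hypothetical answers for an AI system that already falls under an Annex III area."""
    performs_profiling: bool                        # profiling of natural persons
    narrow_procedural_task: bool
    improves_completed_human_activity: bool
    detects_patterns_without_replacing_review: bool
    preparatory_task_only: bool


def is_high_risk(system: AnnexIIIUseCase) -> bool:
    """Simplified reading of Article 6(3); not a substitute for legal review."""
    # Profiling of natural persons keeps the system high-risk in all cases.
    if system.performs_profiling:
        return True
    # Meeting at least one of the four conditions takes the system out of the high-risk tier.
    exempt = any([
        system.narrow_procedural_task,
        system.improves_completed_human_activity,
        system.detects_patterns_without_replacing_review,
        system.preparatory_task_only,
    ])
    return not exempt
```

For example, a hypothetical tool that only converts unstructured CVs into a structured format for a human recruiter might meet the "narrow procedural task" condition, whereas a tool that ranks candidates by inferred personality traits profiles natural persons and stays high-risk.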

Reading the EU AI Act can be overwhelming. Use our AI Act Checker to establish whether your AI system is high-risk.

c. High-level overview of the obligations of providers of High-Risk systems

Here are some of the core obligations placed on providers of high-risk AI systems under the EU AI Act:

Pre-market conformity assessment

Before placing a high-risk AI system on the EU market or putting it into service, you must demonstrate compliance with the Act’s mandatory requirements via an appropriate conformity assessment (internal or third-party).

Risk and quality management systems

You must establish and maintain documented frameworks for identifying, evaluating and mitigating risks throughout the AI system’s lifecycle, and integrate these into a formal quality management system.

Data governance

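Ensure that training, validation and test datasets are relevant, sufficiently representative and, to the best extent possible, free of errors and complete; examine them for possible biases and subject them to ongoing quality control.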

Technical documentation & record-keeping

Keep detailed documentation of system design, development processes, data sources, performance metrics and decisions made during risk assessments, so that national authorities can verify compliance.

Transparency & user information

Provide clear instructions for use, label outputs (e.g. “This result was generated by an AI system”) and supply any information necessary for deployers to understand system capabilities and limitations.

Human oversight

Design and implement measures (e.g. stop buttons, override mechanisms) allowing human operators to intervene in or deactivate the AI system when necessary.

Accuracy, robustness & cybersecurity

Build systems to achieve a high level of accuracy and resilience against manipulation or attacks, and implement safeguards against unauthorized access, data corruption or other cybersecurity threats.

Registration & post-market monitoring

Register each high-risk AI system (and, for public-sector uses, the deploying entity) in the EU database before deployment. Maintain ongoing surveillance of real-world performance, report serious incidents or breaches, and cooperate with market surveillance authorities.

Our AI Act Checker may help you identify the relevant Articles in the EU AI Act with direct links to the text.

d. High-level overview of the obligations of deployers of High-Risk AI systems

The obligations of deployers of high-risk AI systems complement and differ from providers’ duties. While providers focus on design-phase requirements (e.g., risk-management systems, data governance, technical documentation, conformity assessments and post-market monitoring), deployers are responsible for operational compliance. This includes using the system correctly, supervising it in practice, and feeding back performance data and incidents to providers and regulators.

Here are some of the core obligations placed on deployers of high-risk AI systems under the EU AI Act:

Use as instructed

Adhere strictly to the instructions for use and the technical and organizational guidelines supplied by the system’s provider.

Assign competent human oversight

Appoint qualified staff with the necessary training and authority to supervise the AI’s functioning and step in when required.

Validate input data

Ensure that any data you supply to the system is appropriate, accurate, and reflective of its intended use case.

Monitor operation and report issues

Keep a close watch on the AI’s performance; if you detect potential hazards or serious malfunctions, halt its use immediately and inform both the provider (or distributor/importer) and the designated market surveillance authority.

Retain system logs

Maintain all automatically generated logs under your control for a minimum of six months (or longer if mandated by EU or national law).
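A retention rule like this is easy to get wrong at month boundaries. Here is a minimal sketch of a deletion-date check, assuming logs are dated by calendar day and that "six months" means six calendar months; the helper names and the `extra_months` parameter (standing in for longer periods required by other EU or national law) are hypothetical.

```python
import calendar
from datetime import date

MIN_RETENTION_MONTHS = 6  # Art. 26(6) minimum; other EU or national law may require longer


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping the day to the last day of a shorter month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def earliest_deletion_date(log_created: date, extra_months: int = 0) -> date:
    """Earliest date a log entry may be deleted under this hypothetical policy."""
    return add_months(log_created, MIN_RETENTION_MONTHS + extra_months)


def may_delete(log_created: date, today: date, extra_months: int = 0) -> bool:
    """True once the log has been kept for at least the required period."""
    return today >= earliest_deletion_date(log_created, extra_months)
```

Clamping the day (for example, 31 August plus six months becomes the last day of February) avoids a `ValueError` from constructing an invalid date.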

Inform employees and representatives

Prior to implementation in the workplace, notify affected staff members and their representatives in accordance with relevant labor-law procedures.

Register when required

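Deployers that are public authorities or EU institutions, bodies, offices or agencies must confirm that any high-risk AI system they intend to use is registered in the EU database; if it isn't, they must refrain from using it and alert the provider or distributor.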

Support data-protection impact assessments

Use the transparency information supplied by the provider to complete any required GDPR Data Protection Impact Assessment.

Comply with specialized law-enforcement rules

For post-remote biometric identification, secure timely judicial or administrative authorization, log each use, and restrict processing solely to the purposes approved.

Notify data subjects

When decisions affecting individuals are made or assisted by the AI, inform those individuals that a high-risk system is in use.

Cooperate with authorities

Provide requested information or implement corrective measures at the behest of market surveillance or data protection authorities.

Providers of GPAI

Here is a streamlined overview of the main responsibilities of providers of general-purpose AI models under the EU AI Act:

Comprehensive Documentation

Keep detailed records of your model’s design, training processes, evaluation outcomes and safety checks, and make these available to any authorities upon request.

Transparency to Integrators

Ensure that downstream developers receive all necessary technical details—architecture descriptions, performance metrics and known limitations—so they can deploy the model compliantly.

Data and IP Compliance

Establish and enforce policies to respect copyright and data-protection rules for any third-party material used in model development or fine-tuning.

Training-Data Disclosure

Publish a clear summary of the types and sources of data used to train your model, enabling users to assess potential biases or gaps.

Change-Notification

Notify the designated oversight body whenever you make significant updates, such as altering the model’s intended purpose or performing large-scale retraining, that could affect its risk profile.

Regulatory Cooperation

Respond promptly to any inquiries from EU or national authorities, supplying additional information or adjustments as needed during supervision or investigations.

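Providers of models released under a free and open-source licence, with their parameters, architecture and usage information publicly available, are exempt from the technical documentation and downstream-information obligations, but must still adopt a copyright policy and publish the training-data summary. The exemption does not apply to GPAI models with systemic risk.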

What are GPAIs with Systemic Risk?

Under the EU AI Act, general-purpose AI (GPAI) models are versatile AI models capable of a broad range of tasks without being designed for one particular application (for example, large language models such as GPT-4 or large multimodal models).

A GPAI with systemic risk refers to a subset of these general-purpose models that pose significant potential threats at a societal level, such as:

- Undermining democracy (through disinformation or manipulation)

- Violating fundamental rights (privacy, equality, non-discrimination)

- Affecting public health, safety, or the environment on a large scale

- Enabling harmful or malicious activities due to their broad capabilities and wide adoption.

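Essentially, these are high-impact AI models whose misuse or malfunction could cause substantial societal harm or disruption. Under Article 51, a GPAI model is presumed to have such high-impact capabilities when the cumulative compute used for its training exceeds 10^25 floating-point operations (FLOPs); the Commission may also designate a model as posing systemic risk.

Providers of GPAI models with systemic risk have obligations beyond those applicable to other general-purpose AI models, including model evaluation with adversarial testing, assessment and mitigation of systemic risks, reporting of serious incidents, and adequate cybersecurity protection. These additional obligations are set out in Article 55 of the EU AI Act.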
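Article 51 presumes high-impact capabilities once cumulative training compute exceeds 10^25 FLOPs. The sketch below checks that presumption; to estimate compute when only model size and data volume are known, it uses the widely cited rule of thumb of roughly 6 FLOPs per parameter per training token for dense transformers. That heuristic is an assumption, not part of the Act, and the function names are hypothetical.

```python
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25  # Art. 51(2) presumption threshold


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough estimate for dense transformers: about 6 FLOPs per parameter per token."""
    return 6.0 * n_parameters * n_training_tokens


def presumed_systemic_risk(cumulative_training_flops: float) -> bool:
    """Presumption applies only when training compute is strictly greater than 10^25 FLOPs."""
    return cumulative_training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


if __name__ == "__main__":
    # Hypothetical models: 70B parameters on 15T tokens vs. 400B parameters on 15T tokens.
    for params, tokens in [(70e9, 15e12), (400e9, 15e12)]:
        flops = estimate_training_flops(params, tokens)
        print(f"{params:.0e} params, {tokens:.0e} tokens: {flops:.1e} FLOPs,"
              f" presumed systemic risk: {presumed_systemic_risk(flops)}")
```

Where actual compute logs exist, they should replace the estimate; and because the Commission can designate models below the threshold, a `False` result is not a guarantee.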

EU AI Act penalties

Although the EU AI Act entered into force on 1 August 2024, its penalty provisions apply from 2 August 2025, and fines for GPAI model providers (Article 101) from 2 August 2026.

- Prohibited AI practices (e.g., social scoring): fines of up to €35 million or 7 % of global annual turnover, whichever is higher.

- Breaches of general and high/limited-risk requirements (e.g., data quality, transparency, human oversight): up to €15 million or 3 % of global turnover, whichever is higher.

- False, incomplete or misleading information supplied to notified bodies or competent authorities: up to €7.5 million or 1 % of global turnover, whichever is higher.

- SME proportionality: for SMEs, including start-ups, the same thresholds apply, but the cap is whichever of the two amounts is lower (e.g., if 3 % of turnover is €150 000, that is the maximum fine rather than the €15 million cap).

- EU institutions and bodies: reduced penalties of up to €1.5 million for prohibited AI breaches and up to €750 000 for other compliance failures

- General-Purpose AI (GPAI) providers: subject to fines of up to €15 million or 3 % of turnover for non-compliance with their specific obligations.

Overall, this tiered system, modelled on the GDPR but with steeper caps, underscores the EU’s commitment to enforcing safe, transparent, and accountable AI by August 2026.
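The "whichever is higher" and "whichever is lower" rules translate directly into `max` and `min`. Below is a minimal sketch of the Article 99 ceilings; it computes only the upper limit of a fine, since the amount actually imposed depends on factors such as the nature, gravity and duration of the infringement. The enum and function names are hypothetical, and the separate GPAI regime of Article 101 is not modelled.

```python
from enum import Enum


class FineTier(Enum):
    # (fixed cap in EUR, share of total worldwide annual turnover), per Article 99
    PROHIBITED_PRACTICE = (35_000_000, 0.07)
    OTHER_OBLIGATIONS = (15_000_000, 0.03)
    MISLEADING_INFORMATION = (7_500_000, 0.01)


def maximum_fine(tier: FineTier, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper limit of the administrative fine, not the amount an authority would impose."""
    fixed_cap, share = tier.value
    turnover_cap = share * worldwide_turnover_eur
    # Large undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    # Hypothetical companies: EUR 1 billion turnover vs. an SME with EUR 5 million turnover.
    print(maximum_fine(FineTier.PROHIBITED_PRACTICE, 1_000_000_000))
    print(maximum_fine(FineTier.OTHER_OBLIGATIONS, 5_000_000, is_sme=True))
```

The second call reproduces the SME example above: 3 % of €5 million is €150 000, which is lower than the €15 million cap and therefore becomes the ceiling.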

For a more detailed breakdown of penalty provisions, see the consequences of non-compliance.

Penalties are not the sole motivation for complying with the EU AI Act; adherence also offers substantial strategic and operational advantages. Compliance enhances corporate credibility by demonstrating a clear commitment to ethical practices, transparency, and responsible AI deployment.

It can also serve as a differentiator in the market, positioning the organization as a trustworthy and forward-thinking leader. Additionally, incorporating transparency measures, human oversight, and comprehensive documentation from the outset helps avoid costly system redesigns, legal liabilities, and reputational damage, which can produce long-term cost savings.

EU AI Act Compliance Oversight

To ensure that high-risk AI systems meet strict standards and remain compliant once in the market, the EU AI Act appoints dedicated bodies for conformity assessment and ongoing surveillance.

Notified Body (Conformity Assessment Body)

A Notified Body is an independent organization officially designated by an EU Member State to verify that high-risk AI systems comply with the EU AI Act’s requirements. Where the Act requires a third-party conformity assessment, the Notified Body reviews the provider’s technical documentation, audits its quality and risk management processes, tests the system against applicable standards and issues a certificate before the system can bear the CE marking and enter the market. It also conducts periodic audits to confirm that, once in use, the AI system continues to meet safety, transparency and accountability requirements.

Market Surveillance Authority

Each Member State appoints a Market Surveillance Authority to oversee AI systems already available or in use within its territory. These national regulators have the power to inspect products, request documentation and investigate serious incidents or cases of non-compliance. If an AI system is found to violate the Act, whether through unsafe operation, missing documentation or prohibited functions, the Market Surveillance Authority can require corrective measures such as recalling the system, withdrawing it from the market or imposing fines and penalties. Their work ensures that all AI systems in the EU remain safe and lawful.

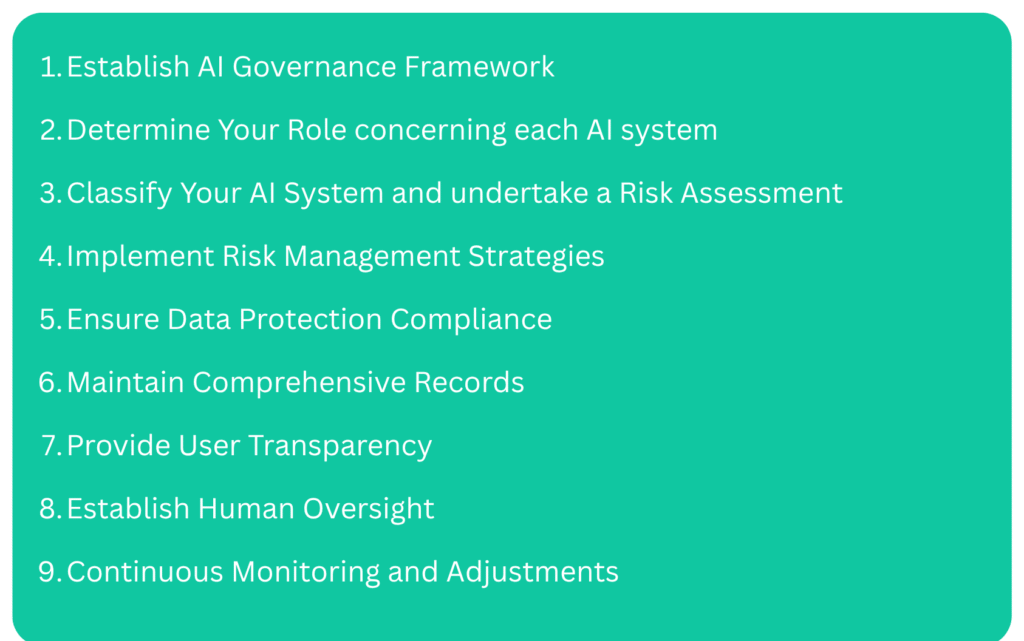

How can organizations comply with the EU AI Act?

Your specific obligations under the EU AI Act will depend on your organization’s role in the AI value chain and the classification of the AI systems you manage. Nevertheless, there is a consistent set of best practices that organizations can adopt to support compliance across the entire AI system lifecycle. The following provides a high-level overview of the key steps that should be implemented to align with the Act’s requirements and ensure responsible, legally compliant AI operations.

Step 1: Establish an AI Governance Framework

Before your organization develops or deploys AI systems, it should establish a comprehensive yet adaptable governance framework aligned with compliance obligations and responsible AI objectives.

Essential elements of this framework include:

- Clearly assigned employee roles and responsibilities to ensure effective oversight and accountability

- Drafting and publishing an AI policy based on recognized ethical standards to guide responsible AI decisions and deployments

- Implementing robust procedures for continuous monitoring, evaluation, and reporting throughout each AI system’s lifecycle

- Embedding human oversight at all stages of AI design and operation.

Step 2: Determine Your Role concerning each AI system

Clarify whether you are:

- A provider of an AI system, a provider of a general-purpose AI (GPAI) model, or a provider of a GPAI model with systemic risk

- A deployer of an AI system

- A distributor, importer, or authorized representative.
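The role definitions in Article 3 can be read as simple conditions on what an organization does with a given system. The sketch below is a simplified reading under stated assumptions: the `Involvement` fields are hypothetical yes/no facts you would establish per system, edge cases (such as becoming a provider through a substantial modification) are not modelled, and one organization may hold several roles at once.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    IMPORTER = "importer"
    DISTRIBUTOR = "distributor"
    AUTHORISED_REPRESENTATIVE = "authorised representative"


@dataclass
class Involvement:
    """Hypothetical facts about how one organization relates to one AI system."""
    develops_or_commissions: bool = False
    places_on_market_under_own_name: bool = False
    uses_under_own_authority: bool = False       # excludes purely personal, non-professional use
    established_in_eu: bool = False
    first_to_place_non_eu_system_on_eu_market: bool = False
    resells_in_supply_chain: bool = False
    holds_written_mandate_from_provider: bool = False


def roles_for(x: Involvement) -> set[Role]:
    """Simplified reading of the Article 3 definitions."""
    roles: set[Role] = set()
    if x.develops_or_commissions and x.places_on_market_under_own_name:
        roles.add(Role.PROVIDER)
    if x.uses_under_own_authority:
        roles.add(Role.DEPLOYER)
    if x.established_in_eu and x.first_to_place_non_eu_system_on_eu_market:
        roles.add(Role.IMPORTER)
    # A distributor is a supply-chain actor other than the provider or the importer.
    if x.resells_in_supply_chain and not (roles & {Role.PROVIDER, Role.IMPORTER}):
        roles.add(Role.DISTRIBUTOR)
    if x.established_in_eu and x.holds_written_mandate_from_provider:
        roles.add(Role.AUTHORISED_REPRESENTATIVE)
    return roles
```

Returning a set rather than a single role reflects the common case of a company that builds a system, sells it under its own name and also uses it internally.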

Determine your role for each of your AI systems with our AI Act Checker.

Step 3: Classify Your AI System and undertake a Risk Assessment

Clearly define and document the intended purposes, contexts, and uses of each AI system your organization handles. Based on these defined use cases, classify each AI system according to the EU AI Act’s categories:

- Prohibited (unacceptable risk)

- High risk

- Limited risk

- Minimal risk

- General-purpose AI (GPAI) model

- GPAI model with systemic risk.
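For AI systems (as opposed to GPAI models, which are assessed separately), the tiers can be checked in order of severity so that the first match wins. This is a minimal triage sketch, assuming the four inputs are answers from your own legal review; the parameter names are hypothetical. Note that transparency duties can apply on top of the high-risk tier, while this function returns only the most severe tier.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "unacceptable risk (prohibited)"
    HIGH = "high risk"
    LIMITED = "limited risk (transparency obligations)"
    MINIMAL = "minimal risk"


def classify(
    prohibited_practice: bool,        # matches an Article 5 practice
    annex_i_product_component: bool,  # Annex I safety component needing third-party assessment
    annex_iii_not_exempt: bool,       # Annex III use case not covered by an Art. 6(3) exemption
    transparency_trigger: bool,       # interacts with people or generates synthetic content
) -> RiskTier:
    """Return the most severe applicable tier for one AI system."""
    if prohibited_practice:
        return RiskTier.PROHIBITED
    if annex_i_product_component or annex_iii_not_exempt:
        return RiskTier.HIGH
    if transparency_trigger:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL
```

Checking the prohibited tier first matters: a system that is both prohibited and interactive must be switched off, not merely labelled.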

Once AI systems have been classified, organizations should conduct detailed risk assessments for each system and its intended use cases. Prohibited AI systems must be identified and eliminated from operation. As part of the assessment process, organizations should identify potential risks, including bias, discrimination, privacy violations, safety concerns, and environmental impact.

It is also essential to review and apply any specific transparency obligations associated with each classification. Finally, clear and accessible information must be prepared to inform users and downstream stakeholders about the AI system’s functions, limitations, and potential risks.

Step 4: Implement Risk Management Strategies

Organizations should develop and document concrete plans and measures to mitigate the risks identified during the assessment phase. These mitigation strategies must be tailored to the specific nature and context of each AI system.

For example, in the case of recruitment software with potential bias, appropriate measures may include implementing debiasing techniques, ensuring human oversight in decision-making, conducting regular audits, and establishing clear procedures for handling complaints.

Step 5: Ensure Data Protection Compliance

Align your AI practices with applicable data protection laws (e.g., GDPR) by implementing robust technical and organizational safeguards, clearly defining and documenting your legal basis for processing personal data, performing Data Protection Impact Assessments (DPIAs) where required, and establishing mechanisms for meaningful human review, especially in scenarios involving automated decision-making.

Step 6: Maintain Comprehensive Records

Organizations should create detailed technical documentation and maintain comprehensive records covering all aspects of their AI systems. This includes information on system architecture, datasets used, results of risk assessments, implemented mitigation measures, and ongoing performance monitoring. Proper documentation supports transparency, accountability, and regulatory compliance, while also facilitating internal reviews and external audits.
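One way to keep such records consistent across systems is a structured register entry. The sketch below is a hypothetical internal format: the Act prescribes the content of technical documentation (Annex IV) but not this data structure, so the class and field names are illustrative only. The `missing_items` helper gives a quick readiness check by listing fields that are still empty.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date
from typing import Optional


@dataclass
class AISystemRecord:
    """Hypothetical internal register entry; field names are illustrative."""
    system_name: str
    role: str                     # e.g. "provider" or "deployer"
    risk_tier: str                # e.g. "high risk"
    intended_purpose: str
    architecture_summary: str
    datasets: list[str] = field(default_factory=list)
    identified_risks: list[str] = field(default_factory=list)
    mitigation_measures: list[str] = field(default_factory=list)
    last_performance_review: Optional[date] = None

    def missing_items(self) -> list[str]:
        """List documentation fields that are still empty."""
        return [name for name, value in asdict(self).items() if value in ("", [], None)]

    def to_json(self) -> str:
        """Serialize the record, converting the review date to ISO format."""
        data = asdict(self)
        if self.last_performance_review is not None:
            data["last_performance_review"] = self.last_performance_review.isoformat()
        return json.dumps(data, indent=2)


if __name__ == "__main__":
    record = AISystemRecord("cv-screener", "deployer", "high risk",
                            "Shortlisting job applicants", "")
    print(record.missing_items())
```

Keeping records as data rather than free text makes it straightforward to export them for internal reviews or to answer an authority's request.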


Step 7: Provide User Transparency

Organizations must clearly communicate to users how their AI systems function, including the systems’ capabilities and any associated risks. This involves providing user guidance and disclosures that are both easily understandable and readily accessible, ensuring that individuals interacting with the AI are well-informed and able to use the technology responsibly and effectively.

Step 8: Establish Human Oversight

- Implement processes ensuring appropriate human involvement, particularly for high-risk AI systems.

- Guarantee human capability to intervene, supervise, and explain critical decisions or actions taken by AI systems.

Step 9: Continuous Monitoring and Adjustments

- After deployment, continuously monitor and evaluate AI system performance

- Adjust processes, update risk assessments, and refine mitigation measures regularly to maintain compliance.

How can Whisperly support your AI governance and compliance journey?

Whisperly AI Governance Solution

Managing AI governance and regulatory compliance is often complex. To support your organization in keeping pace with evolving AI laws such as the EU AI Act, Whisperly Library offers:

- AI Compliance Knowledge Center

- AI Registry with AI-related risks and best practices for risk mitigation

- Insights into dozens of AI models

- Synchronization with your Data Privacy governance.

Whisperly AI Governance Consulting

For organizations in need of thorough guidance, our team of governance professionals offers tailored solutions to create and execute AI governance frameworks that reflect your unique context and industry requirements. Leveraging our governance-as-a-service model, you’ll receive strategic support to ensure your AI efforts are responsibly and effectively governed from the outset.