1. Introduction: From Data Protection to Responsible Innovation

Innovation no longer happens in isolation because every new digital solution, AI tool, or data-driven platform is built on information about people. And with that comes responsibility.

As technologies evolve, organizations are expected not only to innovate, but to innovate ethically by ensuring that their products and systems respect individuals’ privacy and fundamental rights from the very start. This expectation is no longer optional — it is now deeply embedded in European data protection law and the way regulators evaluate compliance.

That is where the Data Protection Impact Assessment (DPIA) comes in.

Introduced by the GDPR, a DPIA serves as a structured process that allows organizations to identify, understand, and mitigate privacy risks before they escalate into regulatory or reputational issues. Rather than being a purely legal requirement, it is a practical framework for responsible innovation — one that transforms privacy from a compliance checkbox into a design principle.

Today, DPIAs have become increasingly relevant for companies deploying AI models, digital transformation strategies, and advanced cybersecurity systems. These technologies can bring immense value, but they also process data in complex ways that may expose individuals to risks such as profiling, discrimination, or loss of control over personal information.

Through DPIAs, organizations can achieve what regulators and users increasingly demand: innovation that is both responsible and trustworthy.

2. What Is a DPIA (and Why It Matters)?

At its core, a DPIA is both a compliance requirement and a decision-making tool. It was introduced by the General Data Protection Regulation (GDPR) to help organizations identify and mitigate risks to individuals’ rights before they occur, not after a data incident has already happened.

Unlike traditional audits that look back at what went wrong, a DPIA is a forward-looking process. It encourages companies to assess how personal data will be used, who will have access to it, and whether the planned processing is necessary, proportionate, and fair. In that sense, a DPIA embodies the GDPR’s risk-based approach: it requires organizations to focus on the level of risk their activities create for people and to adjust their safeguards accordingly.

But a DPIA is not just a legal checklist. It is also a practical accountability framework that demonstrates how an organization integrates privacy into its daily operations.

Through a DPIA, businesses can document the reasoning behind their decisions, prove compliance to regulators, and, equally important, show customers that their personal information is handled with care. This transparency has become one of the most effective ways to build and maintain user trust in the digital environment.

The DPIA process is also closely linked to the principles of privacy by design and privacy by default — two cornerstones of modern data protection.

- Privacy by design means integrating privacy safeguards directly into the architecture of a product or service, instead of treating them as an afterthought

- Privacy by default ensures that the most privacy-protective settings are automatically applied unless the user actively decides otherwise.

A well-executed DPIA turns these principles into practice, offering a structured way to embed privacy into every technical and organizational layer of data processing.

Ultimately, it transforms compliance from a defensive obligation into an active governance strategy — one that helps organizations anticipate privacy risks, align innovation with legal and ethical standards, and demonstrate that protecting data is part of how they earn and sustain trust.

3. When Is a DPIA Required?

Not every data-processing activity requires a DPIA, but some certainly do.

Under the GDPR, organizations are legally required to conduct a DPIA whenever a planned processing operation is likely to result in a high risk to individuals’ rights and freedoms.

In other words, a DPIA is mandatory when there is a real possibility that the way personal data is used could significantly affect people, for example by influencing decisions about them, revealing sensitive information, or tracking their behavior in public or digital spaces.

Article 35(3) of the GDPR highlights three situations in particular where a DPIA must be carried out:

- When organizations carry out systematic and extensive automated evaluation of individuals, including profiling, such as algorithms that evaluate credit scores, job applications, or customer behavior, and the results feed decisions with legal or similarly significant effects on people

- When they engage in large-scale processing of special-category data, such as health, genetic, or biometric data, or of data about criminal convictions and offences

- When they perform large-scale systematic monitoring of publicly accessible areas, like city-wide CCTV networks or smart-city analytics systems.

These are the most typical high-risk cases, but they are not the only ones. The European Data Protection Board (EDPB) has identified additional indicators that help determine when a DPIA is needed — for example, when data from multiple sources is combined, when vulnerable individuals (like children or patients) are involved, or when innovative technologies such as artificial intelligence are used.

If two or more of these factors are present, a DPIA should almost always be performed.

To put this into perspective: an AI-powered hiring platform that profiles candidates to predict job performance would clearly trigger a DPIA, as it combines automated decision-making with potentially significant consequences for the individual. The same applies to a healthcare application processing sensitive medical data from thousands of patients, or a company deploying smart surveillance systems in public areas.

Each of these scenarios involves high risks that must be assessed and mitigated before any processing begins.
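The screening logic described above can be sketched in a few lines of Python. This is only an illustration, not a legal test: the factor labels, the function name `screen_for_dpia`, and the "assess case by case" outcome for a single indicator are assumptions made for this example, and a real screening would follow the regulator's published criteria.

```python
# Hypothetical factor labels, paraphrasing the situations and indicators above.
ALWAYS_REQUIRED = frozenset({
    "automated_decision_making_or_profiling",
    "large_scale_special_category_data",
    "systematic_monitoring_of_public_areas",
})
ADDITIONAL_INDICATORS = frozenset({
    "combined_data_sources",
    "vulnerable_individuals",
    "innovative_technology",
})


def screen_for_dpia(factors: set[str]) -> str:
    """Return a coarse screening outcome for a planned processing operation."""
    unknown = factors - ALWAYS_REQUIRED - ADDITIONAL_INDICATORS
    if unknown:
        raise ValueError(f"Unknown factors: {sorted(unknown)}")
    # Any of the three situations named in the law triggers a DPIA.
    if factors & ALWAYS_REQUIRED:
        return "required"
    # Two or more indicators: a DPIA should almost always be performed.
    if len(factors) >= 2:
        return "almost always needed"
    # A single indicator: outcome assumed for this sketch.
    if factors:
        return "assess case by case"
    return "not indicated"


hiring_platform = {"automated_decision_making_or_profiling", "innovative_technology"}
print(screen_for_dpia(hiring_platform))  # required
```

A checklist like this is best treated as a first filter that routes projects to the privacy team, rather than as a substitute for their judgment.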

For readers interested in how personal data is handled throughout the development and deployment of AI systems, Whisperly’s article Use of Personal Data in AI Development and Deployment provides a closer look at how these risks arise in practice and how organizations can address them through responsible design and compliance measures.

That said, not every processing operation calls for a DPIA. Routine, low-risk activities, like maintaining an employee contact database or sending ordinary service emails, typically fall outside this obligation. Similarly, if a DPIA has already been performed for a similar operation and the risks remain the same, it can often be reused with minor adjustments.

Still, many privacy-mature organizations choose to carry out DPIAs even when not strictly required. Doing so helps them stay proactive, especially when adopting new technologies or expanding into new data uses. It signals to both users and regulators that privacy risk management is an integral part of their innovation culture.

4. How Is a DPIA Conducted in Practice?

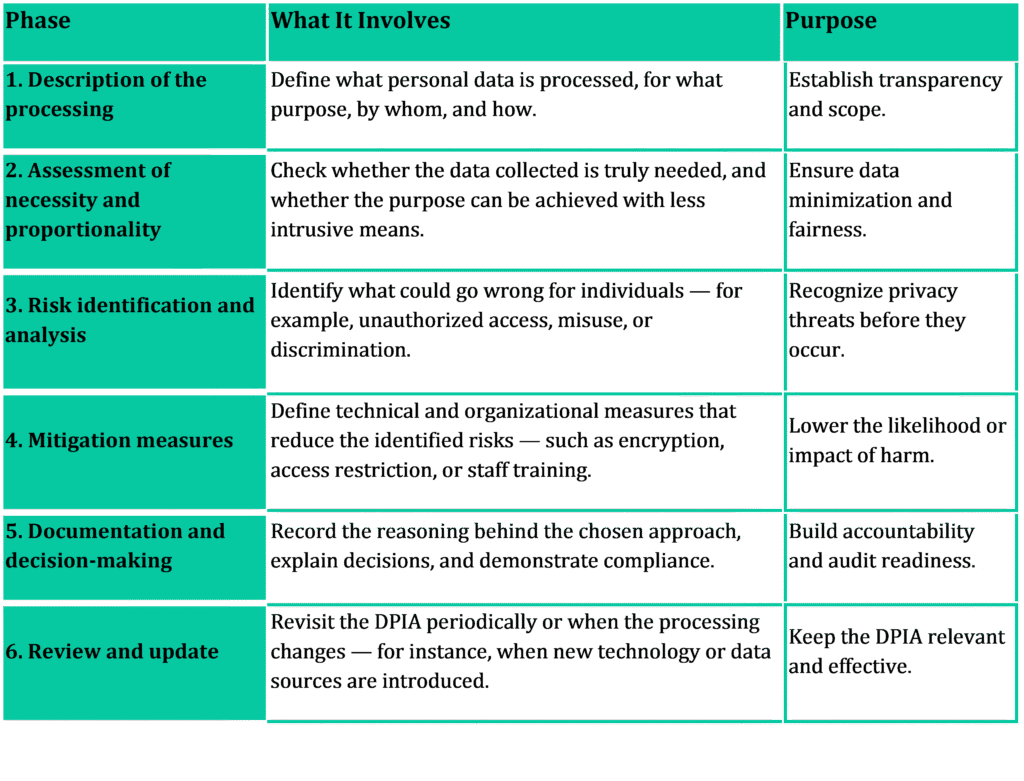

A DPIA may sound like a complex compliance exercise, but in practice, it follows a clear and logical sequence. It is designed to guide organizations through six essential steps — from understanding what data they process, to documenting how they protect it.

A DPIA is also not a one-off task to be checked off a list. It’s a structured process that evolves alongside each project, ensuring that privacy remains a continuous consideration rather than a formality.

When organizations approach these steps thoughtfully, a DPIA becomes a powerful management tool rather than a regulatory burden.

For example, consider a company developing a mobile app with biometric login.

- During its DPIA, the team would describe how facial recognition or fingerprint data is processed, assess whether this feature is necessary, identify risks such as unauthorized access or misuse of biometric identifiers, and introduce safeguards like local storage, encryption, and user consent.

These are exactly the kinds of cybersecurity and data protection measures that form the bridge between privacy compliance and technical resilience.
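One way to keep such an assessment reviewable is to record it as structured data instead of free text. The sketch below is a minimal illustration of the biometric login example: the class names `Risk` and `DpiaRecord`, the pairing of safeguards with specific risks, the necessity wording, and the third risk are all hypothetical and are not taken from any official DPIA template.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    description: str
    safeguards: list[str] = field(default_factory=list)

    @property
    def mitigated(self) -> bool:
        # Treat a risk as mitigated once at least one safeguard is recorded.
        return bool(self.safeguards)


@dataclass
class DpiaRecord:
    processing_description: str
    necessity_justification: str
    risks: list[Risk] = field(default_factory=list)

    def unmitigated_risks(self) -> list[Risk]:
        return [risk for risk in self.risks if not risk.mitigated]


biometric_login = DpiaRecord(
    processing_description="Facial recognition or fingerprint data used for app login",
    # Hypothetical justification, for illustration only.
    necessity_justification="Optional alternative to password login",
    risks=[
        Risk("Unauthorized access to biometric identifiers",
             ["local storage", "encryption"]),
        Risk("Misuse of biometric identifiers", ["user consent"]),
        # Hypothetical extra risk, added to show how a gap surfaces.
        Risk("Biometric data kept after the user disables the feature"),
    ],
)

print([risk.description for risk in biometric_login.unmitigated_risks()])
# ['Biometric data kept after the user disables the feature']
```

Recording risks and safeguards together makes open items visible at a glance, which is useful when the assessment is revisited later.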

Finally, it is important to remember that a DPIA is a living document.

It should be revisited whenever technologies evolve, systems are updated, or new data uses are introduced. Treating the DPIA as an ongoing process — not a one-time report — ensures that privacy remains embedded throughout the project lifecycle, reflecting the organization’s long-term commitment to accountability and trust.

5. Common Mistakes and Lessons from Practice

While DPIAs have become a cornerstone of GDPR compliance, organizations often make the same mistakes, usually not out of negligence, but out of misunderstanding what a DPIA is really meant to achieve.

1. One of the most common pitfalls is starting the DPIA too late — after the project has already gone live.

At that point, risks have already materialized, systems are in production, and any “fix” becomes more expensive and less effective. The purpose of a DPIA is exactly the opposite — to identify and manage privacy risks before they affect users or the business. As privacy experts often say: a DPIA done too late is not a DPIA — it’s damage control.

2. Another frequent mistake is treating the DPIA as paperwork rather than a real analytical process.

Some teams fill out templates just to “tick the compliance box,” without truly evaluating whether the data they collect is necessary or how it might affect individuals. A well-executed DPIA should feel more like a strategic workshop than a form — it should bring together the people who know the system best.

3. And that leads to the next big lesson: collaboration matters.

A meaningful DPIA cannot be carried out by the legal team alone. It requires input from IT, security, product, and data science teams. Imagine a scenario where the legal department drafts a DPIA, but never checks how the AI model actually processes data — or where the engineering team deploys new analytics features without consulting legal about user consent. Both teams are acting in good faith, yet the outcome is non-compliant.

4. Finally, many organizations confuse cybersecurity with data protection.

They are related but not the same: cybersecurity protects systems and data from external threats, while a DPIA focuses on the impact of data processing on people’s privacy and rights. Encryption and access control can prevent a data breach, but they cannot answer whether it was fair or lawful to collect that data in the first place. In practice, both are essential. Strong cybersecurity measures support data protection, and a thorough DPIA ensures those measures are used for the right purpose — to protect individuals, not just systems.

The main takeaway?

A DPIA done right improves both compliance and product reliability. It helps teams build solutions that are secure, lawful, and trusted — by design.

6. DPIA, AI Systems, and Cybersecurity: The Intersection of Risk and Responsibility

Artificial intelligence brings extraordinary potential and equally extraordinary responsibility. Under the GDPR, many AI systems that process personal data are likely to qualify as high-risk processing, since they often involve automated decision-making, profiling, or large-scale analysis of personal data. This is why a DPIA is not just advisable for such AI projects; in many cases it is essential.

A DPIA helps organizations understand what an AI system actually does:

- What data it relies on

- How it reaches conclusions

- What impact those conclusions may have on individuals

- Where bias or misinformation, such as unfair or inaccurate outputs, could emerge.

When those questions remain unanswered, the consequences can be serious.

One recent example came from Australia, where Deloitte reportedly used generative AI to assist in drafting a government report, which included fabricated citations and even a synthetic court judgment, leading to a partial refund of the fee. Shortly afterwards, a similar situation emerged in Canada, where a Deloitte-prepared healthcare report worth nearly 1.6 million CAD contained multiple AI-related citation errors, including references to studies that do not exist. Deloitte said it would revise the report and correct the problematic citations, illustrating how AI-assisted outputs, without proper verification and risk assessment, can compromise the credibility of high-stakes deliverables.

While these cases did not involve a GDPR breach, they illustrate what can happen when AI tools are introduced into sensitive workflows without a clear accountability process or a proper DPIA in place.

Similar challenges appear in many AI-driven systems.

For example:

- AI chatbots and emotion-recognition tools can inadvertently profile users based on tone, language, or facial expressions, raising complex questions about fairness and consent

- Cybersecurity platforms performing real-time behavioral monitoring may analyze employee or user behavior patterns to detect anomalies, but if poorly configured, such systems can easily cross the line into intrusive surveillance.

Each of these technologies processes data in ways that can directly affect individuals’ rights, and that’s exactly where the DPIA becomes indispensable.

A well-designed DPIA could have revealed these risks early — whether the system produces unverifiable outputs, relies on uncontrolled datasets, or monitors individuals too closely. The goal of an AI DPIA is precisely that: to identify and mitigate such risks before they escalate into public or organizational crises.

At a regulatory level, the DPIA also acts as a foundation for the AI Act’s upcoming Fundamental Rights Impact Assessment (FRIA) – a new requirement that extends risk assessment beyond data protection to include broader human-rights considerations.

Under Article 27(4) of the AI Act, where a DPIA has already been conducted under the GDPR, the FRIA will complement rather than duplicate it. This provision ensures that both frameworks work together, creating a unified approach to accountability and responsible AI governance. Although the AI Act has already entered into force, these FRIA obligations will take effect in August 2026, providing organizations with time to prepare and align their DPIA processes with the upcoming requirements.

Cybersecurity plays a central role in this balance. Encryption, access control, audit trails, and dataset integrity checks are critical components of DPIA mitigation measures. They protect the infrastructure on which privacy and accountability depend.

And as organizations mature, many are turning to automated DPIA tools to streamline the process. Privacy compliance platforms such as Whisperly enable teams to manage DPIAs more efficiently — integrating privacy risk assessment directly into product design, AI development, and daily operations.

7. Useful Insights and Best Practices

A DPIA delivers real value only when it becomes part of an organization’s culture — not a one-time legal checklist. The most successful teams treat the process as a living, collaborative effort that evolves with each new technology or data use.

Below are several practical lessons drawn from real-world experience and recognized standards.

1. Involve the DPO early in the project

Engaging the Data Protection Officer (DPO) at the concept stage helps identify potential privacy risks before development begins. When the DPO works closely with designers, developers, and product managers, privacy becomes part of the product’s DNA — not an afterthought added to meet legal requirements.

2. Encourage cross-department collaboration

A DPIA works best when legal, IT, and cybersecurity teams collaborate from the start. Lawyers bring regulatory understanding, engineers provide technical insights, and security professionals ensure that systems remain protected. When these perspectives converge, compliance and functionality reinforce each other naturally.

3. Keep methodologies consistent

Use recognized international standards, such as ISO 31000 (Risk Management) and ISO/IEC 29134 (Privacy Impact Assessment Guidelines), to maintain a structured approach. These frameworks ensure that each DPIA is systematic, measurable, and comparable, regardless of the project or industry.

4. Reuse and regularly update DPIAs

If your organization conducts similar processing operations, adapt existing DPIAs instead of starting from scratch. Schedule periodic reviews, at least annually, or whenever significant changes occur, to ensure that findings remain relevant and accurate.

5. Document every decision

Good documentation is more than formality. It proves accountability — showing regulators, partners, and users that privacy was considered at every stage.

From defining the legal basis to implementing mitigation measures, every choice should be recorded and justified.

6. Consider publishing DPIA summaries

While not legally required, publishing short summaries of completed DPIAs promotes transparency and builds trust. It demonstrates that your organization not only complies with GDPR but also values open communication with users — turning privacy into a visible mark of credibility.

Ultimately, these practices transform the DPIA from a compliance obligation into a governance tool — one that strengthens trust, enhances resilience, and supports responsible innovation.
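The review cadence from practice 4 above (periodic reviews, at least annually, or whenever significant changes occur) is simple enough to automate as a reminder. The following is a minimal sketch: the function name `review_due`, the 365-day interval as a reading of "annually", and the boolean `significant_change` flag are assumptions made for illustration.

```python
from datetime import date, timedelta

# "At least annually", interpreted here as 365 days (an assumption).
REVIEW_INTERVAL = timedelta(days=365)


def review_due(last_review: date, today: date, significant_change: bool = False) -> bool:
    """Return True if the DPIA should be reviewed now."""
    # A significant change triggers a review regardless of elapsed time.
    if significant_change:
        return True
    return today - last_review >= REVIEW_INTERVAL


print(review_due(date(2025, 1, 1), date(2025, 6, 1)))  # False
print(review_due(date(2025, 1, 1), date(2025, 6, 1), significant_change=True))  # True
```

What counts as a significant change remains a judgment call for the team; the flag only records that decision.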

8. Conclusion: DPIA as a Bridge Between Technology and Trust

A well-conducted DPIA shows that privacy is not a barrier to innovation — it’s what makes innovation sustainable.

When privacy risks are identified early and managed thoughtfully, technology can evolve responsibly, earning the trust of users, regulators, and society as a whole. In this sense, the DPIA acts as a bridge between legal compliance and ethical awareness. It transforms regulatory obligations into strategic value, ensuring that every new system, product, or AI solution respects both the law and the people it serves.

A mature approach to data protection demonstrates that compliance and competitiveness are not opposites, but allies. Organizations that understand this balance are not just meeting legal standards — they’re building digital products that users can rely on. Ultimately, a properly conducted DPIA means more than just a checkbox: it represents legal compliance, ethical responsibility, and business credibility in one unified process.

Responsible innovation begins with understanding privacy risks and embedding accountability into every stage of data processing.
