The European Union has made a clear statement with the adoption of the AI Act: artificial intelligence is too important, and too risky, to be left without dedicated oversight inside organizations. To ensure accountability, the regulation encourages a new compliance role – the AI Officer. Sometimes styled as a Chief AI Officer, Artificial Intelligence Officer, or Chief Artificial Intelligence Officer, this position is not strictly mandatory under the Act but is highlighted as a best practice for organizations that develop or deploy AI systems. It is expected to become a cornerstone of corporate governance wherever companies want to demonstrate robust AI compliance.

The idea is straightforward but ambitious. Just as data protection became a board-level issue after GDPR, AI governance is now set to take its place at the center of strategic decision-making. The AI Officer will be responsible for guiding this process: monitoring how AI systems are designed, tested, documented, and used; ensuring that risks are identified and mitigated; and serving as the main contact point for regulators and auditors.

This is more than a technical role. It is a sign that the EU sees trustworthy AI not only as a matter of engineering but also of organizational culture, risk management, and legal compliance. For businesses, although the appointment of an AI Officer is not a strict legal obligation, it is a strategic choice – both a way to prepare for compliance expectations and an opportunity to build trust with clients, partners, and the public.

Later in this text, we will reflect on how this new function compares to the Data Protection Officer (DPO) under the GDPR – a role that reshaped compliance strategies across Europe. But first, we will focus on the AI Officer itself: its legal basis, its mandate, and the challenges companies will face in making this role effective.

AI Officer: A Regulatory Mandate for Trustworthy AI

The recommendation to designate an AI Officer arises from the EU AI Act’s framework for artificial intelligence systems.

Under the Act, providers of AI systems – those who develop and place such systems on the market – are strongly encouraged to appoint an AI Officer. In practice, this recommendation is particularly relevant for deployers of high-risk systems, where the use of AI may create significant risks for fundamental rights, health, or safety. The regulatory logic is clear: where AI has the potential to impact people’s lives in critical ways, organizations are advised to have a dedicated professional ensuring compliance with the Act’s requirements, even though the law does not impose this as a strict obligation.

Examples of such high-risk areas include:

- Employment

- Education

- Healthcare

- Essential services.

This role is anchored in the same policy rationale that guided the creation of EU-mandated officers such as the DPO: compliance cannot be left to chance or distributed informally across departments. The AI Act reflects the view that trustworthy AI demands centralized oversight, independence, and accountability within the organization. By embedding the AI Officer into the governance structure, organizations can ensure that compliance is not treated as a one-off box-ticking exercise, but as an ongoing responsibility throughout the entire lifecycle of AI systems.

From Risk to Oversight: The Core Responsibilities of the AI Officer

The AI Officer is envisioned as the guardian of an organization’s compliance with the EU AI Act. Their mandate stretches across the entire lifecycle of AI systems, with a focus on risk management, accountability, and transparent oversight.

- One of the central duties is to oversee conformity assessments. Before AI systems can be placed on the market or put into service, providers must demonstrate that their systems meet the Act’s strict requirements. The AI Officer ensures that these assessments are not only properly conducted but also embedded into internal processes, so compliance is maintained beyond the initial launch.

Alongside conformity assessments, the Officer supervises the risk management framework: identifying, evaluating, and mitigating risks that could affect health, safety, or fundamental rights.

- Another key responsibility is maintaining and reviewing technical documentation and transparency measures. The AI Act places heavy emphasis on accurate records, traceability, and clear instructions for use. The AI Officer makes sure that documentation is up to date, accessible to regulators, and sufficiently detailed to demonstrate compliance. This role also extends to ongoing monitoring of deployed systems – tracking performance, recording incidents, and ensuring corrective actions when risks emerge.

- Finally, where appointed, the AI Officer can act as a liaison with market surveillance authorities and notified bodies. As a bridge between the company and regulators, the Officer would be responsible for responding to information requests, facilitating audits, and ensuring open communication. Although the role is not mandatory under the AI Act, organizations that choose to establish it position the AI Officer as both an internal watchdog and an external contact point, placing them at the center of compliance operations and regulatory trust-building.
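To make these duties more concrete, the following is a minimal sketch of how an internal register of AI systems might surface open follow-up items. It is an illustration only: the `AISystemRecord` fields, the `open_actions` helper, and the 180-day documentation review interval are assumptions chosen for this example and are not prescribed by the AI Act.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class AISystemRecord:
    """One entry in a hypothetical internal AI system register."""
    name: str
    high_risk: bool
    conformity_assessed: bool
    docs_last_reviewed: date
    open_incidents: list[str] = field(default_factory=list)


def open_actions(record: AISystemRecord, today: date,
                 max_doc_age_days: int = 180) -> list[str]:
    """Return follow-up items for one system.

    The 180-day default is an illustrative assumption, not a legal deadline.
    """
    actions = []
    # High-risk systems need a completed conformity assessment.
    if record.high_risk and not record.conformity_assessed:
        actions.append("complete conformity assessment")
    # Documentation should be reviewed at a regular interval.
    if (today - record.docs_last_reviewed).days > max_doc_age_days:
        actions.append("review technical documentation")
    # Every recorded incident stays open until corrective action is taken.
    for incident in record.open_incidents:
        actions.append(f"resolve incident: {incident}")
    return actions


cv_screener = AISystemRecord(
    name="cv-screener",
    high_risk=True,
    conformity_assessed=False,
    docs_last_reviewed=date(2024, 1, 10),
    open_incidents=["unexplained score drift"],
)
print(open_actions(cv_screener, today=date(2025, 1, 10)))
```

A register of this kind would not replace the assessments themselves. It only gives the Officer a single place to see which systems still need attention.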

Placing the AI Officer within the Company

The AI Officer is not intended to be a symbolic appointment but a structurally significant role within the company. The EU AI Act emphasizes that compliance oversight must be effective, which means the Officer’s place in the organizational chart matters.

First, the role must be exercised with a degree of independence. Similar to how the GDPR shields the Data Protection Officer from direct influence, the AI Officer should be able to perform their duties without interference or pressure from management. This does not mean the Officer operates in isolation, but rather that their judgment on compliance issues must remain free from conflicts of interest.

For instance, an AI Officer responsible for ensuring that a recruitment algorithm is fair and non-discriminatory should not also be the head of HR pushing for faster, cheaper hiring solutions. The pressure to prioritize efficiency could easily conflict with the obligation to flag bias or demand costly adjustments.

Second, the organization must allocate adequate resources. Independence without resources is empty: the AI Officer needs access to technical expertise, legal support, testing infrastructure, and sufficient staff to oversee compliance activities.

The Act deliberately leaves “adequate” open to interpretation, signaling that the depth of support will vary depending on the size of the company and the scope of its AI operations.

Finally, the role is designed to have direct access to senior management. This ensures that compliance concerns are not lost in middle management layers but are instead brought directly to those who carry ultimate responsibility.

In this sense, the AI Officer becomes part of the company’s strategic governance structure, i.e., an embedded compliance leader whose work is both operational and advisory.

The Rare Skillset Behind the AI Officer Role

The AI Officer role is unique because it overlaps with law, technology, and ethics. Unlike positions that are narrowly legal or purely technical, this role demands a hybrid skillset, and that makes defining the ideal profile both exciting and challenging.

a) On one side, the Officer must understand the legal and regulatory framework of the AI Act:

- Risk management obligations

- Conformity assessments

- Transparency duties

- Supervisory authority interactions.

This requires not only legal literacy but also the ability to translate legal requirements into internal processes and corporate governance practices.

b) On the other side, the role demands genuine technical competence. The Officer must be able to grasp how AI models are trained, validated, and monitored, and to communicate effectively with engineers and data scientists. While they do not need to be coding algorithms themselves, they must be fluent enough in technology to spot compliance risks and ask the right questions.

c) Ethical awareness adds a third dimension. The AI Act is designed not only to prevent technical failures but also to safeguard fundamental rights – such as non-discrimination, fairness, and transparency. The AI Officer, therefore, needs to be comfortable assessing not just whether a system works, but whether it aligns with broader principles of trustworthy AI.

Because such a multi-disciplinary profile is rare, organizations may need to combine backgrounds: lawyers who understand technology, engineers with compliance experience, or professionals trained specifically in AI governance. Over time, the market is likely to see the rise of a new career path: compliance experts with a distinct specialization as AI Officers, sometimes even styled as Chief AI Officers to highlight their leadership role in digital governance.

Practical Challenges

While the AI Officer has a forward-looking role, its real-world implementation will be anything but straightforward. Companies preparing to appoint one should anticipate a set of practical obstacles that go beyond simply naming someone to the position.

- One major challenge is defining what counts as “adequate resources.” The AI Act deliberately leaves this standard open, recognizing that compliance needs differ between a multinational deploying multiple high-risk AI systems and a smaller provider working on a single product. But the lack of precision may create uncertainty: how much budget is enough, how many staff should support the Officer, and how deep should testing infrastructure go? Until practice and guidance from regulators emerge, organizations will need to make judgment calls.

- Another issue is the talent gap. The market already struggles to find professionals who combine legal, regulatory, and technical expertise. The AI Officer role, by design, requires all three. Companies may need to build internal training programs, rely on external consultants, or consider shared or outsourced Officer models – at least in the early years of the AI Act’s application.

- There is also the question of role overlap. In many organizations, compliance responsibilities already sit with Chief Compliance Officers, Chief Information Security Officers, or even Ethics Committees. The AI Officer’s duties may cut across these existing functions, creating uncertainty about reporting lines and accountability. Without careful structuring, there is a risk of duplication, internal conflict, or, conversely, gaps where no one takes ownership.

- Finally, cultural integration will matter. For the AI Officer to be effective, they must be more than a box-ticking appointment. They need recognition within the company as a strategic figure, not just a compliance bottleneck. This requires top-level buy-in, open communication with technical teams, and embedding AI governance into the organization’s everyday workflows.

DPO and AI Officer: Why They Often Get Compared

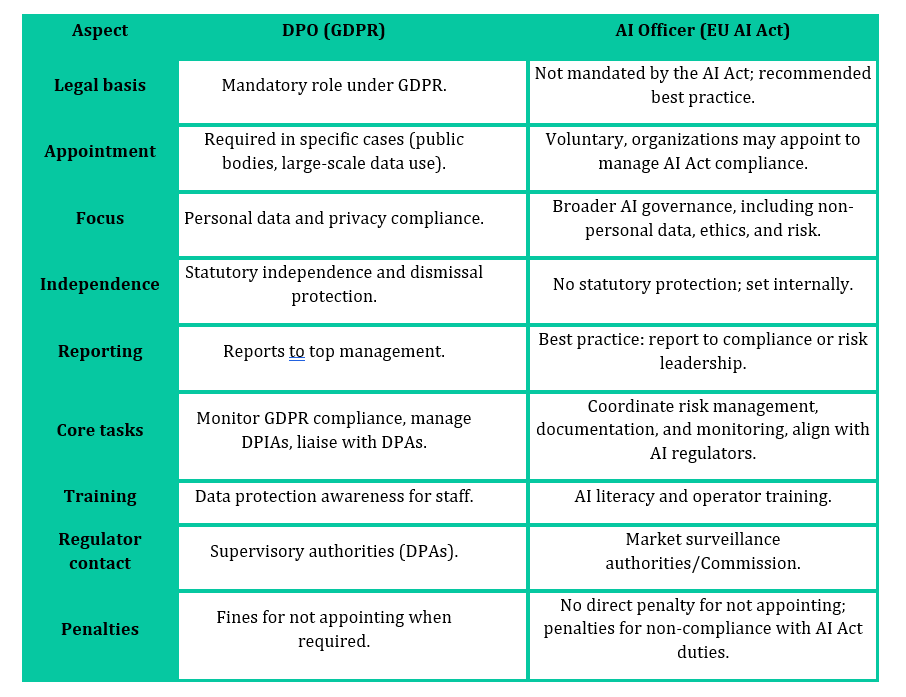

In conversations about AI governance in the EU, the Data Protection Officer (DPO) under GDPR and the emerging AI Officer under the AI Act are frequently placed side by side. This isn’t surprising, as both roles are internal, officer-driven mechanisms shaped by EU legislation to manage compliance, accountability, and regulatory relationships.

The similarities arise from shared regulatory philosophies:

- Both roles demand independence, adequate resourcing, and direct access to senior management

- They both act as a liaison with regulators – DPOs with data protection authorities and AI Officers with market surveillance authorities or notified bodies.

Yet there are important differences. Primarily, the DPO is a role that the GDPR makes mandatory in defined cases and that focuses on protecting personal data and privacy rights, whereas the AI Officer is a recommended but not obligatory position under the AI Act. The AI Officer’s domain also extends to the ethical, safety, and operational dimensions of AI systems, many of which may not involve personal data at all.

The question naturally arises: could a single individual serve as both DPO and AI Officer? In smaller organizations, particularly startups with limited compliance budgets, combining roles may seem attractive. There are clear efficiencies, such as shared knowledge of regulation, streamlined reporting, and reduced overhead.

Yet the risks of overload and conflicts of interest are real. If AI systems process personal data, the same individual would evaluate compliance through two different regulatory lenses, potentially creating internal contradictions. While a dual-hat model might work in specific contexts, most organizations will benefit from clear separation to preserve focus and independence.

a) Shared Foundations, Diverging Skillsets

Despite differences in scope, the two roles are built on the same structural foundations:

- Independence: both officers must be free from conflicts of interest and able to raise concerns without fear of retaliation.

- Resourcing: the law expects organizations to provide sufficient budget, tools, and staffing for both roles.

- Regulatory liaison: each serves as the bridge between the company and external authorities (DPAs for GDPR; market surveillance authorities and notified bodies for AI).

They also share many core functions:

- Both officers are expected to support risk assessments, help develop and update policies and guidelines, conduct training, manage reporting processes, and act as contact points for regulators. In this sense, the EU has applied a familiar governance model: embedding accountability into a dedicated officer role, backed by resources and a clear mandate.

- Both positions are further anchored in the same guiding principles – fairness, transparency, data quality, and a risk-based approach.

However, the focus and expertise of the two roles diverge sharply.

- The DPO is primarily concerned with personal data. Their work revolves around ensuring GDPR compliance, safeguarding data-subject rights, carrying out Data Protection Impact Assessments (DPIAs), maintaining Records of Processing Activities (ROPAs), and managing issues such as data subject requests and breaches. Their profile is usually grounded in law, compliance, risk management, and IT security.

- The AI Officer, a role that the AI Act does not mandate but that is recommended as good practice, must address a much broader field. Their scope of work includes not only data protection when relevant, but also non-personal data, system classification, notifications, technical documentation, model validation, ongoing monitoring, and ethical considerations. In other words, their profile extends beyond compliance into technical literacy and ethical oversight.

The two roles intersect most clearly when AI systems process personal data. In those cases, the DPO and the AI Officer must coordinate closely, ensuring that privacy and AI governance obligations are addressed consistently. Yet even in this overlap, their knowledge bases remain distinct enough that one individual rarely has the capacity to cover both functions comprehensively.

The implication is clear: while the AI Officer shares structural DNA with the DPO, it represents a new compliance profession – one that requires mastering a cross-functional field between law, technology, and ethics.

To make these similarities and differences easier to grasp, the table below sets out a side-by-side comparison of the DPO and the AI Officer:

b) Finding Balance Between AI and Privacy Oversight

Structuring the roles of DPO and AI Officer inside a company requires balance. Smaller organizations may initially opt for one officer with dual responsibilities. Larger organizations, however, will likely benefit from separate appointments, ensuring each role gets the attention it deserves.

The complexity comes when responsibilities intersect, such as an AI system used in HR recruitment that processes sensitive personal data. Here, joint oversight or coordinated reporting is essential. The real challenge for organizations will be avoiding compliance silos: DPOs and AI Officers must share insights without duplicating work or leaving gaps.
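The coordination rule described here can be sketched as a simple routing check. This is a hedged illustration, not a prescribed procedure: `SystemProfile`, `required_reviewers`, and `needs_joint_review` are hypothetical names, and a real organization would likely apply more detailed criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical description of a system under review."""
    name: str
    uses_ai: bool
    processes_personal_data: bool


def required_reviewers(system: SystemProfile) -> list[str]:
    """Decide which officers should be involved in reviewing a system."""
    reviewers = []
    if system.uses_ai:
        reviewers.append("AI Officer")
    if system.processes_personal_data:
        reviewers.append("DPO")
    return reviewers


def needs_joint_review(system: SystemProfile) -> bool:
    """True when both officers are involved and should coordinate."""
    return len(required_reviewers(system)) == 2


hr_tool = SystemProfile("recruitment-ranker", uses_ai=True,
                        processes_personal_data=True)
print(required_reviewers(hr_tool), needs_joint_review(hr_tool))
```

Making the rule explicit, even in this simplified form, helps avoid the silo problem: a system that triggers both reviews is flagged for joint oversight rather than being handled twice in isolation.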

Looking forward, there are two possible trajectories.

- On one path, the AI Officer and DPO remain distinct, each maturing into specialized professions with their own expertise and communities of practice

- On another, the roles may begin to converge into a broader “digital compliance officer” model, especially as AI and data protection intertwine in practice.

For now, the prudent approach is to treat them as complementary but separate. Companies should recognize the unique demands of each role while also investing in mechanisms for collaboration. Over time, market practice and regulatory guidance will reveal whether convergence is realistic or whether separation is essential to preserve the independence and clarity of each officer’s mandate.

c) Key Takeaways

The introduction of the AI Officer under the EU AI Act marks the rise of a new compliance profession. Much like the DPO under GDPR, this role embeds accountability into the organizational structure, but its scope is far broader, stretching from technical oversight and risk management to ethical safeguards and transparency. Unlike the DPO, however, the AI Officer is not a mandatory appointment under the Act – it is a recommended practice that many organizations are expected to adopt as AI governance matures.

For organizations, three points stand out:

- It’s not a DPO clone. The AI Officer draws inspiration from the DPO model but requires multidisciplinary expertise that blends law, technology, and ethics.

- It needs real authority. Independence, resources, and direct access to senior management will determine whether the role is effective or symbolic.

- It can be a competitive advantage. Companies that take AI governance seriously by empowering their AI Officers are better placed to gain trust from regulators, partners, and the market.

The EU has set the framework, but it will be businesses themselves that define what success looks like. Those who treat the AI Officer as a strategic role rather than a compliance afterthought will not only meet legal obligations but also lead in shaping a market built on trustworthy AI.
