Employees across sectors are increasingly utilizing AI tools to enhance efficiency, often informally and without established AI governance frameworks. This pervasive yet largely unregulated use of AI introduces significant operational, ethical, and legal risks that organizations must proactively address.

This is why AI literacy is not only a legal requirement but has become a strategic necessity. It’s not about turning every team member into a technical expert but about equipping every individual with the knowledge and skills to understand, use, and engage with AI responsibly and effectively. It means empowering individuals to make informed decisions, grasp the broader implications of AI technologies, and navigate the ethical challenges they face. For business leaders, especially within startups and SMEs, developing organizational AI literacy is a critical component of operational resilience.

This article provides a practical roadmap to AI literacy in a business context, exploring its core components, growing relevance, and role in addressing ethical and operational risks. Whether you’re a business leader or curious professional, this guide offers clear, actionable steps for using AI across an organization responsibly and effectively.

AI Literacy under the EU AI Act

The European Union’s Artificial Intelligence Act (“AI Act”) is a landmark regulatory instrument with far-reaching implications for businesses involved in the development or deployment of AI systems. At its core, the AI Act is grounded in the principle of human-centered AI, a concept that permeates the entire regulation and must be kept in mind when interpreting individual obligations. This emphasis reflects the broader objective of the EU’s AI regulatory framework: to promote trustworthy AI that serves the public good and aligns with fundamental human rights.

The AI Act establishes a formal obligation to ensure AI literacy among those involved in the development or use of AI systems. This provision entered into application on 2 February 2025, with supervision and enforcement delegated to national market surveillance authorities, which are to be designated by 2 August 2025.

To fully understand what this obligation entails, let’s break it down:

What is the AI literacy obligation?

Providers and deployers of AI systems should take measures to ensure a sufficient level of AI literacy among their staff and other persons dealing with the operation and use of AI systems on their behalf. In doing so, they should take into account the technical knowledge, experience, education, and training of those persons, as well as the context in which the AI systems are to be used and the persons on whom the AI systems are to be used.

What is the scope of the AI literacy obligation?

The AI Act establishes AI literacy as a legal obligation that applies to a broad range of stakeholders, including providers, deployers, and affected persons. For both providers and deployers, this duty extends not only to their internal staff but also to any “other persons” engaged in the operation or use of AI systems on their behalf. This includes third parties such as contractors, service providers, and other outsourced personnel.

In addition, the obligation encompasses affected persons, that is, individuals who are impacted by AI-driven decisions or operations. These individuals must be given sufficient information to understand how decisions made using AI will impact them.

What does an AI literacy obligation require?

Providers and deployers of AI systems must possess the necessary skills, knowledge, and understanding to deploy AI systems in an informed and responsible way. This includes awareness of both the specific AI systems being implemented and the broader risks, opportunities, and potential harms associated with their use.

AI literacy obligations under the AI Act take two distinct forms: specific literacy (relating to the technical and operational understanding of the AI systems being used or introduced by the organization) and general literacy (relating to broader awareness of AI-related risks, benefits, and potential damages).

Importantly, the AI Act imposes an outcome-based requirement; it is not enough to simply provide access to AI training. Organizations must ensure that individuals genuinely attain the required level of competence, so effectiveness should be evaluated using purpose-specific metrics. To comply, businesses should identify both general and specific AI training needs early on and establish appropriate evaluation criteria to measure the actual impact of AI literacy efforts.

What is the required standard of AI literacy?

Providers and deployers must take appropriate measures to ensure a sufficient level of AI literacy among relevant individuals. While this term introduces a degree of flexibility, it also requires a demonstrable and proactive approach.

Currently, there are no officially defined benchmarks or uniform standards outlining what qualifies as a “sufficient” level of AI literacy to meet this requirement. As a result, organizations are encouraged to take proactive steps, starting with an assessment of existing AI literacy levels, followed by the identification of specific training gaps, and the creation of a structured development plan to build relevant competencies. This process should be thoroughly documented, regularly reassessed, and adapted to reflect changes in how AI is used within the organization and the risks it presents. Establishing a clear and traceable AI roadmap will be critical to demonstrating compliance if regulatory review occurs.
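As a rough illustration of the assess, identify gaps, and plan sequence described above, the following Python sketch records one assessment per role and derives a prioritized training list. Everything in it is an assumption made for illustration: the `LiteracyRecord` structure, the 0 to 3 literacy scale, and the role names are hypothetical, and the AI Act itself defines no such scale.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class LiteracyRecord:
    """One documented assessment entry (hypothetical structure)."""
    role: str
    current_level: int   # assessed level on an assumed 0-3 scale
    target_level: int    # level the organization decides the role needs
    assessed_on: date    # kept so the record is traceable over time

    @property
    def gap(self) -> int:
        # Never negative: a role that exceeds its target has no gap.
        return max(0, self.target_level - self.current_level)


def training_plan(records: list[LiteracyRecord]) -> list[LiteracyRecord]:
    """Return only the records with an open gap, largest gap first."""
    open_gaps = [r for r in records if r.gap > 0]
    return sorted(open_gaps, key=lambda r: r.gap, reverse=True)


# Illustrative data only; the roles and levels are made up.
records = [
    LiteracyRecord("marketing", current_level=1, target_level=2, assessed_on=date(2025, 3, 1)),
    LiteracyRecord("data science", current_level=3, target_level=3, assessed_on=date(2025, 3, 1)),
    LiteracyRecord("hr screening", current_level=0, target_level=3, assessed_on=date(2025, 3, 1)),
]

for record in training_plan(records):
    print(f"{record.role}: gap of {record.gap} level(s)")
```

With this sample data, the HR screening role is listed first and the data science role does not appear, since it already meets its target. Keeping the assessment date on each record is one simple way to make the process traceable.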

AI Literacy in Practice

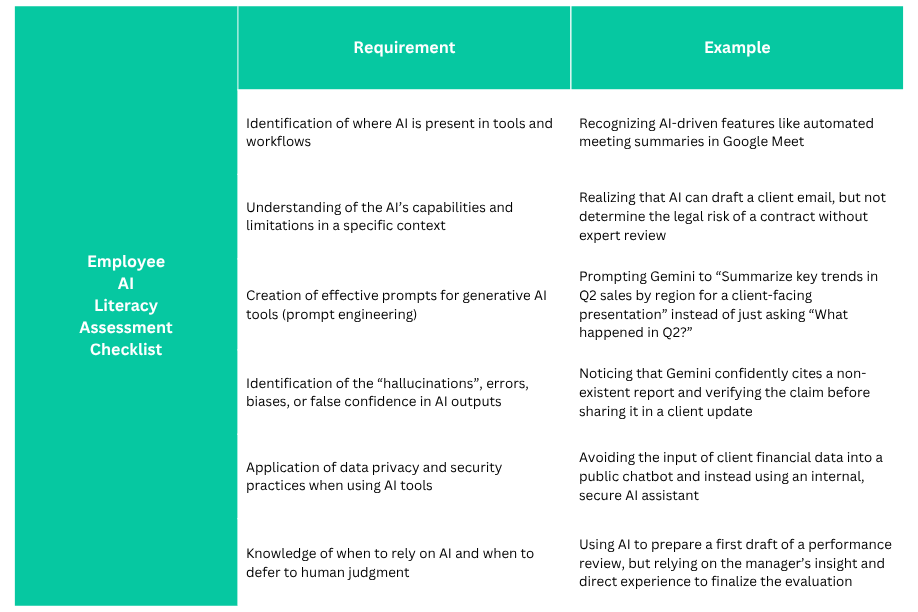

AI literacy refers to the collective capability of a team to grasp fundamental AI principles, use AI tools effectively and responsibly in their daily tasks, critically assess AI-generated results, and stay informed about the ethical considerations and potential risks that AI may pose. Establishing a baseline level of AI literacy ensures that all staff possess the minimum competency needed to engage with AI concepts in public discourse and workplace practice. The aim is not to make every employee an AI specialist, but to enable them to be competent and thoughtful users of AI, equipped with both practical know-how and a solid understanding of how AI systems operate.

A team or individual with AI literacy understands the capabilities and limitations of AI technologies, can assess their outputs with a critical eye, and applies them appropriately within their work context. This also means understanding how AI impacts one’s specific role and contributes to broader organizational goals, while adhering to responsible use principles.

Example: Product marketing managers use Gemini, a generative AI tool, to draft initial messaging for a new campaign. They understand that Gemini is a language model trained on large datasets and may occasionally produce inaccurate or biased content. After generating ideas, they carefully review the outputs, verify any factual claims, and revise the text to align with brand tone and compliance standards. They also avoid entering any confidential product information into Gemini, fully aware of the privacy risks involved. Their use of Gemini reflects not just technical skill, but responsible and informed AI engagement.

AI literacy within an organization involves more than technical know-how; it requires a company-wide mindset that understands AI’s relevance across all roles. It’s not just a concern for IT teams; every employee, including top leadership, should have a foundational understanding of how AI functions and how it affects their work. This begins at the executive level, where informed leadership is essential for driving effective AI integration. Leaders set the tone, and without their engagement, organization-wide adoption is unlikely to succeed.

AI Literacy is a Business Investment

AI tools can drive efficiency and innovation, but only if the organization’s team knows how to use them responsibly. Without proper understanding, misuse can lead to data breaches, flawed decisions, or compliance risks. At the same time, companies that invest in AI literacy unlock faster workflows, higher-quality output, and a stronger competitive edge.

Here are some of the key benefits for organizations of having AI literacy in place:

- Avoiding costly mistakes – prevention of data leaks and flawed decisions by teaching employees and relevant staff how AI tools store and process information.

- Boosting productivity – enabling teams to complete tasks faster and with higher quality by consciously and knowingly integrating AI tools into daily workflows.

- Driving innovation – fostering a culture where employees feel confident experimenting with new AI-powered solutions and processes.

- Strengthening compliance – ensuring employees understand how to use AI in line with privacy and other relevant regulations and ethical standards.

- Doing more with less – achieving greater output and efficiency without increasing headcount, by leveraging AI capabilities.

FAQ for Setting Up AI Literacy Training in Organizations

1. Should the organization measure the employee’s knowledge level?

The AI Act doesn’t impose a formal obligation to measure employees’ AI knowledge, but AI providers and deployers must make sure employees have a sufficient level of AI literacy, considering their technical knowledge, experience, education, and training.

2. Should the organization’s AI literacy approach be risk-based?

Yes. Organizations should tailor their AI literacy efforts based on their role (provider or deployer) and the risk level of the AI systems they provide and/or deploy. High-risk AI systems require more robust training to ensure employees understand how to interact with such AI systems and/or how to manage and mitigate associated risks.

3. Is AI training mandatory, or are other initiatives acceptable for organizations? What should be the format of such training?

Formal training isn’t strictly mandated, but relying only on user manuals or basic instructions is not enough. Organizations must provide appropriate training or guidance based on staff roles, knowledge levels, and how AI is used. For high-risk AI systems, additional measures are required to ensure employees are fully prepared and human oversight is maintained. Regarding the format of such training, there’s no one-size-fits-all approach. The AI Office doesn’t mandate specific training; requirements depend on the specific context.

4. Can staff with AI-related degrees or AI development experience be considered AI literate without further action?

Not automatically. Their knowledge must still be assessed against the specific AI systems in use and the actual scope of their qualifications.

5. Are organizations required to appoint specific roles or obtain specific certificates to comply with Article 4 of the AI Act?

No. Article 4 does not require formal roles like an AI officer or AI governance board, nor does it mandate any specific certificates. Organizations can meet compliance by maintaining internal records of training and other AI literacy efforts.

6. How often should AI literacy efforts be updated or reassessed?

AI literacy should be reviewed periodically, especially when new AI systems are introduced, major updates occur, or AI incidents highlight knowledge gaps. Regular reassessment ensures that staff remain equipped to handle evolving AI technologies and risks. At Whisperly, we recommend annual training as a minimum.

7. What are the potential penalties if an organization fails to comply with the AI literacy obligation under Article 4 of the AI Act?

Organizations that do not comply with Article 4 of the AI Act may face penalties or enforcement measures imposed by national market surveillance authorities. These authorities, designated by each Member State, must be appointed by 2 August 2025 and will begin supervising and enforcing this obligation on 2 August 2026.

National market surveillance authorities can impose penalties and other enforcement measures to sanction infringements of Article 4. This will be based on national laws that Member States are due to adopt by 2 August 2025. Although the exact penalties may vary across jurisdictions, enforcement will follow a proportionate approach, considering the nature and gravity of the infringement, as well as whether the non-compliance was intentional or due to negligence.

Sanctions are more likely in cases where incidents occur that can be linked to insufficient AI training or guidance of employees or other people operating AI systems. Therefore, maintaining clear records of AI literacy efforts and proactively addressing AI training gaps is essential not only for compliance but also for minimizing potential liability.

Where to Start with AI Literacy in Your Organization?

Understanding and planning for AI literacy follow the same principles as other training and development efforts. It starts with identifying who needs what, adapting learning goals to specific roles, and defining clear performance indicators.

While there’s no single template, organizations can build their approach depending on how widely AI is used and what risks are involved. The key is to shape the strategy around actual needs, recognizing that effective AI literacy must be tailored, not generic.

In the following section, we outline a step-by-step approach to help organizations assess and develop AI literacy aligned with their specific context and needs.

Phase 1: Determining Organizational Position in the AI Chain

An initial task is to establish whether the organization qualifies as a provider, a deployer, or both, and whether any AI-related functions are carried out by third parties on its behalf or, conversely, whether it operates on behalf of another entity.

Given the complexity of corporate structures, this clarification is not always straightforward. A structured review process is therefore essential to identify internal and external roles connected to the development or use of AI systems. This forms a critical foundation for both AI literacy planning and broader AI governance, including the development of internal policies such as an AI Policy.

Phase 2: Mapping Roles Relevant to AI Interaction

Following the clarification of the organization’s position in the AI ecosystem, the next phase involves identifying all internal and external roles that engage with or are impacted by AI systems. This forms the basis for designing AI literacy initiatives aligned with the specific responsibilities and risk exposure of each group.

A comprehensive role-mapping process should account for varying degrees of involvement across functions, including executive leadership, technical personnel (e.g., developers and data scientists), operational teams, legal, compliance, risk, and procurement roles, the general workforce, and third-party actors using AI on behalf of the organization. Accurate role identification ensures that AI literacy efforts are not generic but appropriately adapted to each function’s exposure, authority, and potential risk.

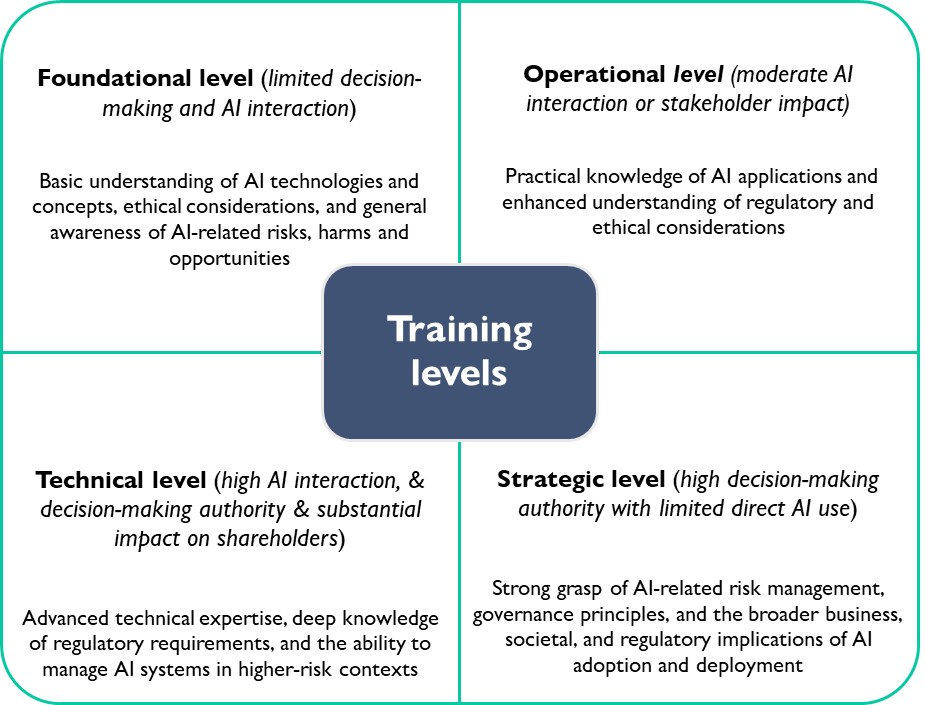

Phase 3: Assigning Training Levels Based on Risk Exposure

Once roles have been mapped across the organization, the next phase involves assigning them to appropriate AI literacy training levels. This should follow a risk-based logic, considering each role’s degree of interaction with AI systems, its influence over decision-making, and the potential impact on stakeholders.

Mapping these role clusters early allows AI training to be tailored to responsibilities, risk levels, and decision-making power. In AI-light environments, reverse mapping, from AI systems to users, can speed up the process.
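The risk-based assignment described in this phase can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed method: the `RoleProfile` structure, the 0 to 3 rating scale, the three tier names, and the sample ratings are all hypothetical. The three factors mirror the ones named above: degree of interaction with AI systems, influence over decision-making, and potential impact on stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleProfile:
    """Hypothetical risk profile; each factor is rated 0 (none) to 3 (high)."""
    name: str
    ai_interaction: int       # how directly the role works with AI systems
    decision_influence: int   # how much the role shapes AI-supported decisions
    stakeholder_impact: int   # potential effect on customers, staff, or the public


def training_level(role: RoleProfile) -> str:
    """Map a role to an illustrative training tier.

    The tier follows the highest single factor, so one high-risk
    dimension is enough to require deeper training.
    """
    peak = max(role.ai_interaction, role.decision_influence, role.stakeholder_impact)
    if peak >= 3:
        return "advanced"
    if peak == 2:
        return "intermediate"
    return "foundational"


# Illustrative ratings only; a real mapping would come from the role review.
roles = [
    RoleProfile("general workforce", ai_interaction=1, decision_influence=0, stakeholder_impact=1),
    RoleProfile("procurement", ai_interaction=1, decision_influence=2, stakeholder_impact=1),
    RoleProfile("data scientist", ai_interaction=3, decision_influence=2, stakeholder_impact=2),
]

levels = {role.name: training_level(role) for role in roles}
print(levels)
```

Taking the maximum rather than an average is a deliberate choice in this sketch: a role with little hands-on AI use but strong influence over AI-supported decisions should not be averaged down into the lowest tier.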

Phase 4: Tailoring AI Literacy to Organizational Context

AI literacy programs should reflect the unique context of each organization, including its industry and regulatory obligations. While general AI training can address core topics like ethics, risks, and legal obligations, the content must be tailored to individual roles. For example, senior executives may need to understand how AI aligns with strategic goals and governance expectations, while technical teams require deeper knowledge of AI system design, model risks, and compliance requirements.

Creating customized training programs based on the specific duties and risk exposure of each role allows organizations to equip their teams with the relevant skills and understanding needed to use AI systems competently and ethically.

Phase 5: Continual Monitoring and Adjustment

AI literacy is an evolving requirement. As AI technologies and regulatory expectations shift, so must the competencies of those interacting with them. Organizations should implement ongoing AI evaluation mechanisms, such as regular feedback cycles, training refreshers, and periodic reviews or audits, to ensure AI literacy remains aligned with current needs. It’s also advisable to establish clear triggers for reassessment, including AI-related incidents, system updates, or the introduction of higher-risk AI applications.
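The reassessment triggers listed above lend themselves to a simple check. The sketch below is illustrative only: the `LiteracyStatus` structure and its field names are hypothetical, and the 365-day interval is an assumption reflecting an annual minimum rather than anything the AI Act prescribes.

```python
from dataclasses import dataclass
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=365)  # assumed annual minimum, not a legal figure


@dataclass
class LiteracyStatus:
    """Hypothetical state tracked per team or role cluster."""
    last_review: date
    incident_since_review: bool = False
    system_updated_since_review: bool = False
    higher_risk_system_added: bool = False


def reassessment_reasons(status: LiteracyStatus, today: date) -> list[str]:
    """List every trigger that currently calls for a reassessment."""
    reasons = []
    if today - status.last_review >= REVIEW_INTERVAL:
        reasons.append("periodic review due")
    if status.incident_since_review:
        reasons.append("AI-related incident")
    if status.system_updated_since_review:
        reasons.append("system update")
    if status.higher_risk_system_added:
        reasons.append("higher-risk AI application introduced")
    return reasons


# Example: reviewed in February, with a system update since then.
status = LiteracyStatus(last_review=date(2025, 2, 1), system_updated_since_review=True)
print(reassessment_reasons(status, today=date(2025, 9, 1)))
```

An empty list means no trigger has fired. Returning the reasons instead of a bare yes or no keeps a record of why a reassessment was started, which supports the documentation effort.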

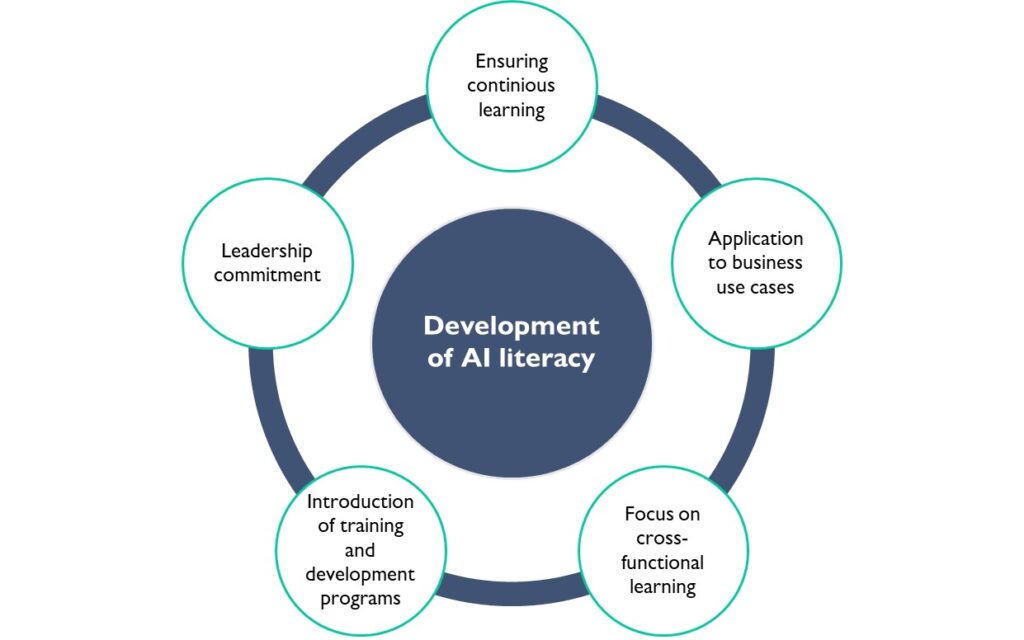

The Core Pillars of an AI Literacy Framework

To build a truly AI-literate workforce, organizations must look beyond general awareness and embrace a well-rounded AI framework that supports practical, responsible, and informed AI use. AI literacy in the business context rests on three interconnected pillars: technical understanding, practical application, and ethical awareness. Together, these components empower teams to adopt AI tools with confidence, discernment, and accountability.

1. Understanding How AI Works

This pillar focuses on essential AI concepts, such as machine learning, natural language processing, algorithms, and the processes by which AI systems are trained on data. It’s not about coding or deep technical mastery but about understanding the logic and fundamental principles behind AI.

Such foundational knowledge enables employees to comprehend why AI behaves in specific ways, why data quality is crucial for reliable AI outputs, and why AI is not a definitive solution but a tool with specific operational characteristics. This understanding also prevents unrealistic expectations and reduces the likelihood of misuse.

2. Applying AI in the Workplace

This pillar centers on the practical ability to work with AI tools in real business contexts. It’s not enough to understand what AI is; employees must know how to use it productively within their roles. This includes selecting the right AI tools, integrating them into workflows, and critically assessing when and how AI adds value.

Applied capability means confidently engaging with AI for real tasks: a marketing team drafting content with generative AI, a data analyst spotting trends with AI-driven insights, or a manager using AI to automate scheduling or reporting. It also requires knowing when not to use AI, recognizing its limitations, and ensuring human oversight where necessary.

By mastering this pillar, organizations can unlock the true potential of AI – greater speed, efficiency, and innovation – while ensuring its use remains purposeful and aligned with business goals.

3. Ethical Awareness

Using AI takes more than operational know-how; it requires ethical clarity. This pillar is about cultivating awareness of the broader implications AI can have on individuals, organizations, and society. It emphasizes critical themes such as bias in data and algorithms, manipulative technologies like deepfakes, data privacy, transparency in automated decision-making, and the potential for unintended harm.

Responsible judgment equips professionals to ask the right questions: Is this AI system fair? Could it disadvantage certain groups? Are we transparent about how decisions are made? For leaders, it’s also about safeguarding organizational integrity, avoiding ethical missteps that can damage reputation, invite legal risk, or erode customer trust.

By embedding this ethical lens into AI literacy, organizations can foster a culture where AI is used not just effectively, but also conscientiously, grounded in accountability, fairness, and public trust.

The Evolving Nature of AI Literacy

AI literacy is a continuously evolving concept that grows alongside advances in technology. As AI systems become more sophisticated and widespread, including the rise of general-purpose AI (GPAI) that can be applied across diverse tasks and sectors, the definition of AI literacy will continue to expand. It will involve an understanding of not only the functionality and risks of specific AI tools but also the broader implications of increasingly flexible and autonomous AI models. Continuous learning and adaptability will be key to staying AI literate in the future.

Rather than treating AI literacy as a standalone compliance obligation, it should be embedded into broader AI governance, AI risk management, and AI learning strategies. This integrated approach supports ongoing alignment with regulatory frameworks such as the AI Act, while also strengthening internal capabilities and promoting a culture of responsible innovation.

Proactive investment in AI literacy enables organizations to navigate complexity, manage risk, and remain competitive in a rapidly shifting landscape. It is not simply about compliance; it is about readiness, resilience, and ensuring that AI is leveraged in ways that are ethical, effective, and aligned with long-term organizational values.