Artificial intelligence is becoming an operational constant across industries, increasingly integrated into critical business functions and decision-making processes. Even if the company does not “officially” use or develop AI tools, some form of AI is likely already integrated into its daily operations through ChatGPT, automated decision-making systems, data forecasting tools, or similar technologies.

But as AI becomes more embedded in enterprise workflows, the range and complexity of associated risks increase, particularly in areas such as data privacy, algorithmic bias, and regulatory compliance.

Without a clear AI governance framework, companies face growing exposure that can undermine trust, create legal vulnerabilities, and disrupt core operations. Preparing an AI Policy is a critical step toward mitigating these risks, laying the foundation for responsible AI use, ensuring organizational accountability, and positioning the company as a forward-thinking leader.

If you’re tasked with drafting an AI Policy for your organization and aren’t sure where to start, this guide provides a practical, business-oriented starting point.

Understanding the Concept of an AI Policy

What is an AI Policy?

A policy in artificial intelligence can have different meanings depending on the context, most commonly referring to an AI Ethics Policy (guidelines for responsible development and use of AI systems), an AI Governance Policy (legal and regulatory frameworks for AI development and use), an AI Decision-Making Policy (strategies used in machine learning), or an AI Internal/Organizational Policy (internal company rules for managing AI systems). In this blog, the focus is specifically on the AI Organizational Policy (hereinafter referred to as: “AI Policy”), which outlines how AI systems should be managed within the company.

An AI Policy serves as a roadmap for how a company develops, uses, and monitors AI systems, ensuring that AI practices align with applicable laws and ethical standards and that employees’ actions align with the company’s core values and strategies.

Representing the company’s formal commitment to responsible AI development and deployment, the AI Policy helps maximize AI benefits while mitigating risks such as bias, data breaches, and legal complications. Since each company has a unique structure, objectives, and risk profile, its AI Policy must be tailored to reflect its specific context and responsibilities in managing AI systems.

As a high-level document, the AI Policy sets the “rules of the house” within the company by establishing a standard of responsibility through clearly defined objectives, principles, and safeguards for the development and use of AI systems. For this reason, the AI Policy is generally recommended as the first step in building a solid AI governance framework.

While drafting a standalone AI Policy isn’t the only option, some form of AI governance is essential. Depending on how the company engages with AI, this typically results in one of two paths: updating existing policies if AI use is banned or substantially limited, or creating a dedicated AI Policy if AI is actively adopted and permitted, especially in areas like decision-making, data processing, or customer interaction.

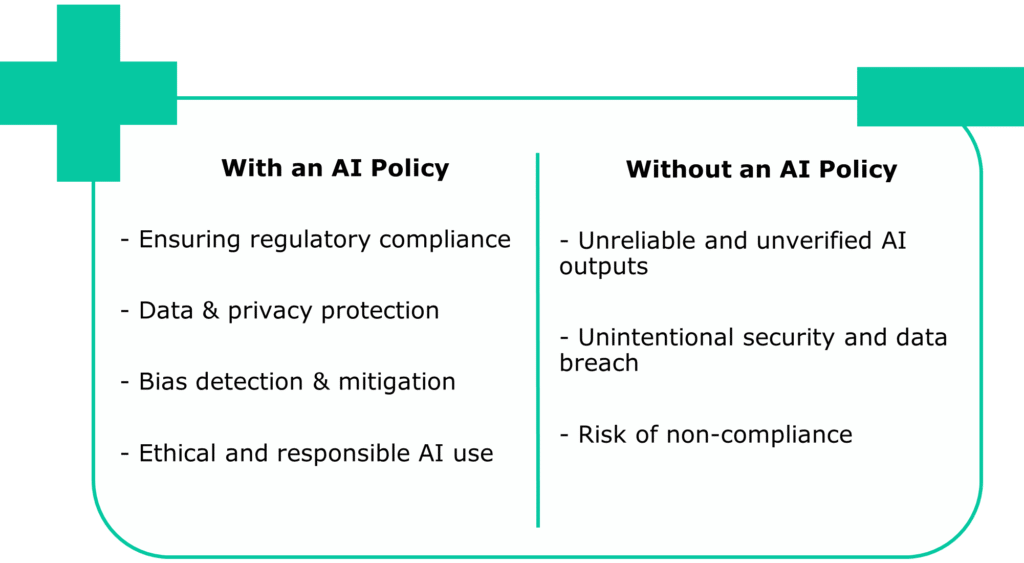

Strategic Benefits of Implementing and Risks of Lacking an AI Policy

Before implementing an AI Policy, a company should weigh both the potential benefits and the risks of operating without one, recognizing the strategic value of a well-written AI Policy and the importance of informed, responsible decision-making in an AI-driven landscape.

The key reasons a company should consider adopting an AI Policy include:

- Ensuring regulatory compliance – an AI Policy helps ensure that the company’s AI practices stay within legal boundaries, protecting it from compliance risks as regulations such as GDPR and HIPAA, and emerging AI-specific legislation such as the EU AI Act, continue to evolve.

- Data & privacy protection – AI technologies, particularly generative AI tools, operate by processing large volumes of data. In the absence of clear safeguards, employees could inadvertently share proprietary or personal data with AI systems, increasing the potential for unauthorized access or data compromise. An effective AI Policy sets clear protocols for data collection, storage, sharing, and processing to ensure privacy is well protected.

- Bias detection & mitigation – AI systems inherently reflect the quality and objectivity of the data they are trained on. When training data contains embedded biases, the outputs can unintentionally discriminate against individuals based on attributes such as gender, race, or age. A well-crafted AI Policy mandates periodic evaluations and bias reviews of AI outputs.

- Ethical and responsible AI use – an AI Policy establishes a clear framework for ethical AI use, guiding its deployment in a way that supports the company’s workforce and reputation, while also defining limits on AI’s role in decision-making to prevent unethical outcomes and reputational risks.

The key risks a company may face without an AI Policy include:

- Unreliable and unverified AI outputs – the term “intelligence” in AI is misleading, as these systems operate on data and algorithms, not intelligence. Generative AI tools are trained on vast public datasets and generate responses based on statistical likelihood, not factual accuracy. As a result, the produced content can be false, partially correct, or biased, and may even reflect harmful stereotypes. Without an AI Policy, there are no established checks in place to identify issues early and minimize damage.

- Unintentional security and data breach – without clear guidance, employees may unknowingly expose sensitive information by using public AI tools. For example, entering private customer data into the AI systems can result in unintended disclosure or long-term data retention outside the company’s control. An AI Policy mitigates these risks by formally defining permitted AI tools, outlining acceptable use, and setting boundaries for data handling.

- Risk of non-compliance – without an AI Policy, it becomes significantly harder to keep up with evolving laws and regulations. This lack of structure increases the risk of non-compliance, legal exposure, and regulatory penalties as obligations grow more complex.
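
The data-exposure risk described above is one place where a policy rule can be backed by a simple technical control. The following is a minimal sketch, assuming a hypothetical `screen_prompt` helper that runs before text is sent to an external AI tool. The two patterns are illustrative only and would miss most real-world sensitive data; a production setup would more likely rely on a dedicated data loss prevention tool.

```python
import re

# Illustrative patterns only (assumption): real detection needs far broader coverage.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def screen_prompt(text: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which categories were found."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label.upper()} REDACTED]", text)
        if count:
            found.append(label)
    return text, found


if __name__ == "__main__":
    clean, found = screen_prompt(
        "Refund jane.doe@example.com, card 4111 1111 1111 1111 please"
    )
    print(clean)   # redacted text that could be forwarded to the AI tool
    print(found)   # categories that triggered, e.g. for logging or a user warning
```

Whether such a check blocks the request, redacts it, or only warns the employee is a decision the AI Policy itself should make.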

Key Questions a Company Must Address Before Drafting an AI Policy

Before drafting an AI Policy, a company must take a step back to assess the environment it operates in, the AI tools it relies on, the values it upholds, and the risks it faces, recognizing that an effective AI Policy is not merely a formal document, but the product of careful preparation, including clear goals, capable teams, and shared accountability.

The questions below are designed to help organizations assess whether they are truly ready to define how AI should be used, governed, and understood.

a. Has the company aligned on what qualifies as AI within its operations?

The company must first align on how it defines AI, determine which systems and tools fall within that definition, and identify where they are already integrated into its operations.

b. Has the company established a working group and educated the board on AI?

To effectively guide the company’s approach to AI, a working group should be established to lead the drafting of the AI Policy, bringing together the necessary expertise and representation from relevant departments and stakeholders.

Simultaneously, targeted education on key risks, such as bias, privacy, and workforce impact, is essential to ensure that board members are prepared to make informed and responsible decisions.

c. Has the company clarified why it needs an AI Policy and what it aims to achieve?

Before drafting an AI Policy, the company must define its primary objectives in adopting an AI Policy, i.e., what the AI Policy is meant to enable, protect, or prevent. These objectives might include ensuring safe and approved AI use, improving internal efficiency, etc. A clear understanding of these goals will help shape permitted AI tools, acceptable AI use cases, and expectations for responsible behavior.

d. Has the company identified the key drivers behind its AI governance efforts?

A well-designed AI Policy should be based on tangible motivations, such as the expanding use of AI tools, heightened oversight from authorities, internal anxieties around inappropriate AI use, or the imperative to protect the company’s reputation. Recognizing these triggers ensures that the AI Policy is shaped by actual risks and opportunities and designed to support meaningful, actionable outcomes rather than abstract principles.

e. Has the company defined its ethical principles and evaluated the legal and regulatory landscape?

Before establishing rules for AI use, the company must first define the ethical principles and values that should shape the development and deployment of AI systems. At the same time, the company must understand the legal and regulatory landscape surrounding AI, including data protection laws, privacy regulations, and industry-specific guidelines. Aligning the AI Policy with these requirements is essential to ensure compliance and reduce potential legal exposure.

f. Has the company identified AI use cases and assessed potential risks?

To draft a meaningful AI Policy, the company must first gain a clear understanding of how AI will be integrated across its operations, i.e., who will use it, for which tasks, and in what contexts. Equally important is anticipating potential risks, such as bias, security vulnerabilities, or unintended AI outcomes, and establishing proactive safeguards and boundaries. By identifying both the opportunities AI presents and the exposures it entails, the company can create informed, practical rules for responsible AI.

g. Has the company clearly defined roles, responsibilities, and governance for AI?

For an AI Policy to be truly effective, the company must determine and communicate who is responsible for what. The company should define clear roles and responsibilities for those involved in developing, deploying, and overseeing AI systems to ensure informed decision-making, effective risk management, and ethical alignment throughout the AI lifecycle. Just as critical is providing these stakeholders with the necessary knowledge and skills through appropriate AI training.

h. Has the company planned continuous monitoring and evaluation of AI systems?

Before establishing an AI Policy, the company must consider how it will ensure the AI Policy remains effective over time. AI Policies cannot be static; they require a framework for ongoing monitoring of AI system performance, detecting unintended effects, and assessing continued alignment with ethical standards. Building feedback loops and periodic reviews from the outset is essential to ensure the long-term relevance, reliability, and accountability of both AI systems and the AI Policy that governs them.

i. Has the company planned how it will communicate its AI Policy?

As the company prepares to develop its AI Policy, it should also plan how the AI Policy will be communicated, ensuring it is clear, practical, and accessible. The AI Policy should be written in understandable language, provide actionable guidance, and be shared through internal channels such as company-wide emails and training sessions.

Pre-Drafting AI Policy Checklist for Companies

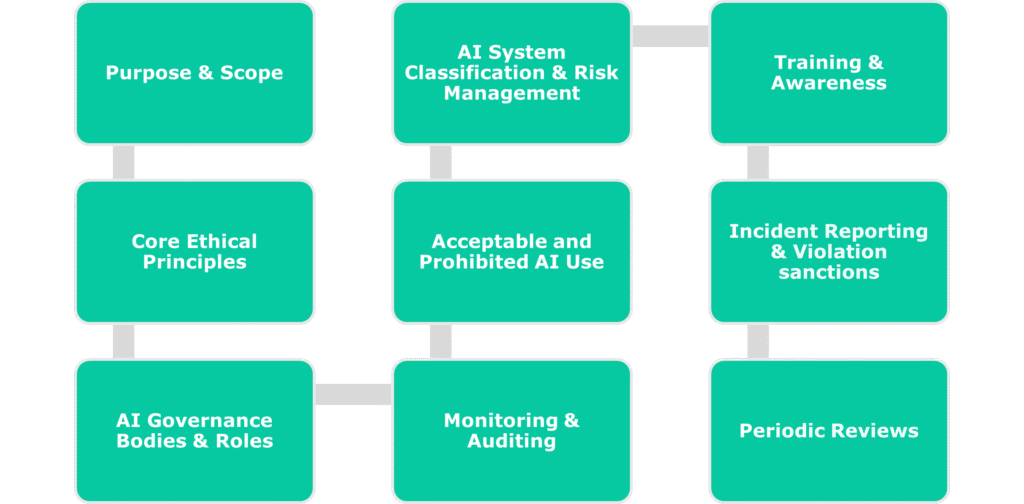

Core Elements of an AI Policy

A well-written AI Policy, while tailored to each organization’s specific context, objectives, and stakeholders, should always include certain essential principles and implementation guidelines to ensure responsible and effective use of AI.

The following components represent the foundational elements that every AI Policy should address:

1) Purpose & Scope

An AI Policy typically begins with an introductory section outlining the company’s commitment to the establishment of guidelines and best practices for responsible and ethical use of AI in a way that reflects the company’s values, legal requirements, ethical standards, and commitment to stakeholder well-being. It should be clearly stated why the AI Policy is being created and what it aims to achieve, ensuring alignment with the company’s business goals and objectives. Furthermore, the range of AI systems and activities to which the AI Policy applies within the company should be defined, as well as the individuals to whom the AI Policy applies.

2) Organization’s Core Ethical Principles

To ensure that the company upholds social responsibility and maintains public trust, the AI Policy should define the essential rules and guiding principles for how AI is expected to be used within the company. This section forms the operational core of the AI Policy by translating ethical values and obligations, often aligned with frameworks such as those from the OECD, UNESCO, or the EU High-Level Expert Group on AI (AI HLEG), into actionable governance areas that reflect the organization’s specific values. These principles strengthen the internal company culture and ensure that AI practices are guided by good intentions and aimed at trustworthy AI systems.

The following are examples of operational principles that can be implemented:

- Responsible AI use – what constitutes ethical and responsible use of AI systems within the company.

- Compliance with laws and regulations – specification of all applicable international, national, and industry-specific legal frameworks with which the use of the AI system must comply, such as data protection, privacy, consumer rights, IP laws, etc.

- Transparency and accountability – establishment of the employees’ transparency obligation relating to the use of AI in their work, as well as their responsibility for the AI outputs, including the ability to explain and justify AI-driven results. The AI systems used shall be transparent in their operation, and the responsibilities for overseeing AI systems should be defined. A centralized system for AI governance and compliance efforts can be established to ensure comprehensive transparency across the company’s proposed and active AI activities.

- Data privacy & security – establishment of rules for handling of personal and sensitive data in the context of AI. Introduction of strict adherence to company data protection policies as well as security policies, ensuring that all personal and/or sensitive data used in AI systems is properly anonymized, securely stored, and processed in a manner that upholds privacy and regulatory compliance.

- Safety, security, robustness – establishment of protection of AI systems against external attacks, including safety mechanisms throughout their lifecycle, and ensuring they function appropriately even under adverse conditions.

- Bias, Fairness, Equity & Justice – the company’s commitment to monitoring, detecting, and mitigating biases in AI systems through employees’ active assessment of AI outputs and ensuring that AI systems do not result in discriminatory or exclusionary outcomes.

- Human-AI collaboration – clarification of the role of human oversight and the limitations of relying on AI-generated recommendations.

- Third-party services – establishment of ethical standards and legal requirements for the utilization of third-party AI services or platforms.

3) AI Governance Bodies & Roles

Establishment of dedicated AI governance structures, including the definition of executive roles and the assignment of clear responsibilities, to ensure the responsible development and deployment of AI systems. A broader AI governance strategy may also be developed, which defines a high-level board or steering committee along with their roles and responsibilities.

4) AI System Classification and Risk Management

All AI systems that are or will be in use should be defined according to their intended use and risk classification. Permitted, restricted, and prohibited AI systems within the company should be defined and justified. The AI Policy should outline how the company will identify, monitor, and mitigate AI risks and ensure that AI systems are regularly tested for accuracy and reliability, and that any risks are proactively addressed.
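
One way to make the classification above operational is a simple register of AI systems that tooling or reviewers can query. The sketch below is illustrative only: the tool names, the three-status scheme as an enum, and the `check_use` helper are assumptions, and the default of treating unlisted tools as not approved is a design choice the company would need to confirm.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PERMITTED = "permitted"
    RESTRICTED = "restricted"   # allowed only under stated conditions
    PROHIBITED = "prohibited"


@dataclass(frozen=True)
class AISystem:
    name: str              # hypothetical tool name
    intended_use: str
    status: Status
    conditions: str = ""   # filled in for restricted tools


# Hypothetical register entries, for illustration only.
REGISTER = {
    system.name: system
    for system in [
        AISystem("internal-code-assistant", "drafting code", Status.PERMITTED),
        AISystem("public-chatbot", "drafting text", Status.RESTRICTED,
                 "no personal or confidential data in prompts"),
        AISystem("cv-screening-model", "ranking job applicants", Status.PROHIBITED),
    ]
}


def check_use(tool_name: str) -> str:
    """Return a short decision; tools missing from the register are not approved."""
    system = REGISTER.get(tool_name)
    if system is None:
        return "not approved: tool is not in the register"
    if system.status is Status.PROHIBITED:
        return "prohibited"
    if system.status is Status.RESTRICTED:
        return f"restricted: {system.conditions}"
    return "permitted"


if __name__ == "__main__":
    for name in ["internal-code-assistant", "public-chatbot", "new-unvetted-tool"]:
        print(name, "->", check_use(name))
```

Keeping the justification for each status next to the entry (here, the `conditions` field) makes the register easier to audit when the AI Policy is reviewed.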

5) Acceptable and Prohibited AI Use

Specification of the acceptable AI use cases within the company, as well as the clear definition of the roles AI will play and delineation of activities strictly prohibited in AI usage, such as conducting political lobbying, categorizing individuals based on protected class status, or entering sensitive information into AI systems.

6) Continuous Monitoring and Auditing

Establishment of regular audits and monitoring processes to assess the performance, accuracy, and fairness of AI systems and stipulation of the necessary adjustments to be made based on audit findings.
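
As an illustration of what a fairness check in such an audit might compute, the sketch below compares favourable-outcome rates across groups in a sample of AI decisions. It is a simplified example under stated assumptions: the function names and the sample data are hypothetical, and the 0.8 review threshold is borrowed from the commonly cited "four-fifths" rule of thumb rather than prescribed by this guide. A real audit would use metrics and thresholds chosen with legal and domain experts.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs; returns favourable-outcome rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group rate divided by highest group rate (1.0 means equal rates)."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group, so the rates are equal
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical audit sample: group A selected 8 of 10 times, group B 4 of 10.
    sample = ([("A", True)] * 8 + [("A", False)] * 2
              + [("B", True)] * 4 + [("B", False)] * 6)
    ratio = disparate_impact_ratio(sample)
    REVIEW_THRESHOLD = 0.8  # assumption: illustrative threshold, set by the policy owner
    print(f"ratio={ratio:.2f}, needs review: {ratio < REVIEW_THRESHOLD}")
```

A low ratio does not by itself prove discrimination; it flags the system for the human review that the audit process defines.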

7) Training and Awareness

Identification of training requirements to ensure users of AI systems understand risks, responsibilities, and ethical considerations, and introduction of ongoing education programs. The AI Policy should make clear that each member of staff is responsible for keeping up to date with the AI Policy.

8) Incident Reporting

A reporting process for violations or potential concerns related to AI use, including the establishment of channels for reporting, confidentiality measures, and protections against retaliation. The processes in case of an AI incident should also be defined.

9) Violation of AI Policy

Definition of the consequences for violations of the AI Policy, including disciplinary actions that may involve termination of the employment agreement and potential legal consequences.

10) AI Policy Review

Definition of a regular schedule for reviewing and updating the AI Policy to ensure adherence to the AI Policy and identify potential risks. Furthermore, the AI Policy owner or committee responsible for ensuring the AI Policy’s relevance should be appointed.

AI Policy Implementation

The successful implementation of an AI Policy requires a structured, multi-phase approach that ensures both compliance and practical integration across the company.

The following steps outline a recommended implementation process:

Step 1:

AI Policy Development with Cross-Functional Input – start by drafting the AI Policy following the previously outlined steps. To ensure it reflects both operational realities and ethical standards, involve a diverse team of stakeholders from across the company, ideally through a dedicated body like an AI Governance Board, to provide relevant input. Once the AI Policy is drafted, it should be reviewed by legal experts to ensure compliance.

Step 2:

Organization-Wide Dissemination and Attestation – once finalized, the AI Policy should be communicated to all employees. To ensure accountability, each employee should acknowledge that they understand the AI Policy and agree to comply with its provisions.
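
Attestation is easier to enforce when acknowledgements are tracked per policy version. The following is a minimal sketch, assuming acknowledgements are stored as hypothetical `(employee, version)` pairs; in practice this data would likely live in an HR or compliance system.

```python
def missing_attestations(employees, attestations, current_version):
    """Return employees with no acknowledgement of the current policy version, sorted by name."""
    acknowledged = {
        employee for employee, version in attestations if version == current_version
    }
    return sorted(set(employees) - acknowledged)


if __name__ == "__main__":
    # Hypothetical data: ben acknowledged an older version, chen has not acknowledged at all.
    staff = ["ana", "ben", "chen"]
    records = [("ana", "1.1"), ("ben", "1.0")]
    print(missing_attestations(staff, records, current_version="1.1"))
```

Tying the check to a version number means that a revised AI Policy automatically triggers a fresh round of acknowledgements.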

Step 3:

Operational Integration and Procedural Alignment – following AI Policy adoption, managers and employees should collaboratively define concrete workflows and control mechanisms that align daily AI use with the AI Policy’s requirements. This includes developing team-specific procedures and decision-making protocols. This step transforms the AI Policy from abstract principles into practical, enforceable actions and ensures consistent interpretation and application of the AI Policy.

Step 4:

Continuous Evaluation and Iteration – treat the AI Policy as a dynamic document that evolves with technological advancements and organizational experience. Establish a routine AI Policy review cycle, leveraging audit findings, risk assessments, and operational feedback to refine and improve the AI Policy over time.

AI Policy is a Living Framework, not a One-Off Document

AI is a relatively new phenomenon that is evolving at a rapid pace. While its significance and applicability may vary depending on the industry or size of a business, no company can afford to ignore it. Rather than waiting for AI to “settle,” companies should take proactive steps today, such as:

- Determination of where AI can bring the most value to the business

- Assessment of the potential risks AI may introduce

- Development of a comprehensive AI Policy, and

- Establishment of a designated AI governance authority.

However, even when a standalone AI Policy is developed, it is unlikely to be sufficient on its own. Existing internal policies, such as those related to data protection, IT security, and acceptable technology use, will almost certainly need to be revised to reflect the unique challenges AI brings.

That’s why an AI Policy should not be seen as a one-off document, but rather as part of a broader legal and organizational ecosystem that evolves alongside the technology. Like AI itself, an AI Policy must remain a living framework, adapted and governed continuously to stay relevant and effective.

The Whisperly Library offers a collection of gold-standard AI governance policies, including a comprehensive AI Policy, available on a self-service basis. Each policy can be customized to fit your organization’s needs using our provided templates and AI-powered drafting tool.
